ABSTRACT 3D IBFV Hardware-Accelerated 3D Flow Visualization

3D IBFV:Hardware-Accelerated3D Flow Visualization Alexandru Telea?Jarke J.van Wijk?

Department of Mathematics and Computer Science

Technische Universiteit Eindhoven,the Netherlands

A BSTRACT

We present a hardware-accelerated method for visualizing3D?ow ?elds.The method is based on insertion,advection,and decay of dye.To this aim,we extend the texture-based IBFV technique pre-sented in[van Wijk2001]for2D?ow visualization in two main di-rections.First,we decompose the3D?ow visualization problem in a series of2D instances of the mentioned IBFV technique.This makes our method bene?t from the hardware acceleration the orig-inal IBFV technique introduced.Secondly,we extend the concept of advected gray value(or color)noise by introducing opacity(or matter)noise.This allows us to produce sparse3D noise pattern advections,thus address the occlusion problem inherent to3D?ow visualization.Overall,the presented method delivers interactively animated3D?ow,uses only standard OpenGL1.1calls and2D tex-tures,and is simple to understand and implement.

CR Categories:I.3.3[Computer Graphics]:Picture/Image Generation—Display Algorithms

Keywords:Flow Visualization,Hardware Acceleration,Texture Advection,OpenGL

1I NTRODUCTION

Visualization of two and three dimensional vector data produced from application areas such as computational?uid dynamics(CFD) simulations,environmental sciences,and material engineering is a challenging task.Although not a closed subject,2D vector?eld visualization can now be addressed by a comprehensive array of methods,such as hedgehog and glyph plots,stream lines and sur-faces,topological decomposition,and texture-based methods.The last class of methods produces a“dense”,texture-like image repre-senting a?ow?eld,such as spot noise and line integral convolution (LIC).For a comprehensive survey of these methods,see[Hauser et al.2002].

Texture-based techniques have a number of attractive features. First,they produce a continuous representation of a?ow quantity which covers every dataset point.In comparison,discrete methods, such as streamlines and glyph plots,sample the datset,leaving to the user the interpolation of the visualization from the drawn sam-ples.Secondly,most texture methods relieve the user from the te-dious task of deciding where to place the data to be advected,e.g. the streamline seed points.Finally,recent texture-based methods employ graphics hardware,to render animated visualizations of the ?ow?eld at interactive rates,thereby using motion to effectively convey the impression of?ow.

?E-mail:{alext,vanwijk}@win.tue.nl

However effective and ef?cient in2D,texture-based techniques are not still extensively used for visualizing3D?ow.Indeed,3D ?ow visualization exhibits a number of problems,some of them re-lated to the use of texture-based techniques,others inherent to the extra spatial dimension.The main problem inherent to3D?ow vi-sualization is the occlusion by the depth dimension.This problem is even more obvious in case of dense visualizations,such as pro-duced by texture-based techniques,as compared to discrete meth-ods,such as streamlines.Another important problem of texture-based3D?ow visualizations is the dif?culty of quickly producing and rendering changing volumetric images that would convey the motion impression.

Recently,Image-Based Flow Visualization(IBFV)has been pro-posed for2D?ow?elds[van Wijk2001].Based on a combination of noise injection,advection,and decay,IBFV is able to produce insightful and accurate animated?ow textures at a very high frame rate,is simple to implement,and runs on consumer-grade graphics hardware.In this paper,we extend the IBFV technique and make it suitable to render3D vector?elds.In this extension,we keep IBFV’s main features:a high frame rate,implementation simplicity, and independence on specialized hardware.Moreover,we address the issue of depth occlusion in3D by extending the texture noise model the original IBFV introduces.Speci?cally,we add an opac-ity(or matter)noise to the gray value(or dye)noise already present in the IBVF.This gives a simple but powerful framework for tuning the visualization density without sacri?cing the overall contrast.

Section2overviews the existing texture-based visualization methods for3D?ow,with a focus on hardware-accelerated meth-ods.Section2.1presents the2D IBFV method we dwell upon.In Section3,we introduce the main concepts and the implementation of our method.Section4presents several results obtained with our method and discusses parameter settings.Finally,Section5draws the conclusions.

2R ELATED W ORK

As mentioned in Sec.1,a number of texture-based methods have been developed for visualizing3D?ow.In this section,we give a brief overview of these methods.We focus on the hardware-accelerated ones,as our aim is to produce animated3D?ow imagery at an interactive rate.

One of the?rst papers to address the dense visualization of3D vector data was published in1993by Craw?s et al.[1993].Here, splats are used,following the line integral convolution(LIC)tech-nique,to show the direction of the?eld.

More recently,in1999,Clyne and Dennis[1999]and Glau[1999]presented volume rendering techniques for time-dependent vector?elds which make use of parallel computing, respectively3D texture on SGI Onyx machines.In the same year, Rezk-Salama et al.[1999]present a method based on3D LIC textures.In this work,the impression of?ow is given by animating a precomputed LIC texture via cycling colors in the hardware color tables.Additionally,clipping surfaces can be interactively adjusted to specify the user’s volume of interest.The method achieves,on an SGI machine,20frames per second(fps)without clipping and3-4

fps when complex clipping surfaces are used.However effective,

the method can not interactively address time-dependent?elds,as

this would involve recomputing the LIC texture.Moreover,the

method uses OpenGL3D textures,which are not yet hardware

accelerated by consumer-grade graphics cards.

In contrast to the above,Weiskopf et al.propose a method

for rendering time-varying vector?elds as animated?ow tex-

tures[Weiskopf et al.2001].The principle is the same as for

IBFV,namely the method injects and advects a texture.However,

less attention is dedicated to the noise generation as in the IBFV

method[van Wijk2001].Special programmable per-pixel opera-

tions of the nVidia GeForce card family are used to offset,or ad-

vect,a texture image T I,as function of another texture T V that en-

codes the vector?eld.Speci?cally,the authors use the offset and dot

product texture shaders of the GeForce cards,as well as multitextur-

ing capabilities to combine several textures in a single pass.How-

ever,as the authors mention,the method would be applicable to3D

?elds only when the needed per-pixel operations are supported for

3D textures by the GeForce cards.For3D?elds,a similar method

is proposed that uses3D textures,per-pixel texture extensions of

SGI’s VPro graphics cards,and the SGI-speci?c post-?ltering bias

and scale image operations.Given this specialized hardware,the

method achieves4fps for an image of3202pixels of a3D?ow

dataset of1283cells.

The previous method has been extended one year later by Reck et

al.to handle3D curvilinear grids[Reck et al.2002].However,the

dye injection and advection process that drives the visualization re-

mains the same,which means that the method handles3D?elds only

using specialized SGI hardware.Given the extra overhead of han-

dling curvilinear grids,this method achieves only5fps for a?eld of

163cells,when an accelerating cell clustering technique that trades accuracy for speed is used.Without clustering,the method needs7

seconds per frame.

Visualizing3D?ow with dense imagery is dif?cult,as outlined

in Sec.1,due to the inherent occlusion problem.This problem is

addressed by Interrante and Grosch[1989].Essentially,a number

of strategies for computing effective LIC textures is given,such as

using region-of-interest(ROI)functions to limit the rendered vol-

ume,using sparse noise for the LIC to limit the volume?ll-in,using

3D halos to give a shadow effect to the LIC splats,and using ori-

ented fast LIC[Wegenkittl and Gr¨o ller1997]to convey directional

information by using assymetric?lter kernels.However,these tech-

niques trade speed for visual quality,and thus cannot generate inter-

active?ow animations.

2.12D IBFV

As described above,an essential limitation of3D texture-based?ow

visualizations is that they cannot be generated interactively,at least

not on consumer-grade graphics cards.In the next section,we

introduce our3D image-based?ow visualization method,or3D

IBFV for short,which addresses this issue.To better understand the

method,we?rst overview2D IBFV that serves as a basis for our

method.

Consider an unsteady2D vector?eld v(x,t)∈R2,de?ned for

t≥0and x∈?,where??R2is typically a rectangular region.

v represents typically a?ow?eld.However,other vector?elds can be considered too.

The trajectory of a massless particle in the?eld,or a pathline,is

the solution p(t)of the ordinary differential equation:

dp

=v(p(t),t),(1) given a start position p(0).Integrating the above equation by the Euler method gives the well known

p n+1=p n+v(p n,t)?t(2)

Take now a2D scalar property A(x,t)advected by the?ow,such as the color of advected bye.A will be,by de?nition,constant along pathlines de?ned by Eqn.1.Thus,in a?rst order approximation,we have

A(p n+1,n+1)=A(p n,n),if p n∈S,else0(3) However,one needs to initialize the dye advection by inserting dye into the?ow.For this,we take a convex combination of the advec-tion,as de?ned by Eqn.3,and the dye injection,de?ned by a scalar image G,as follows

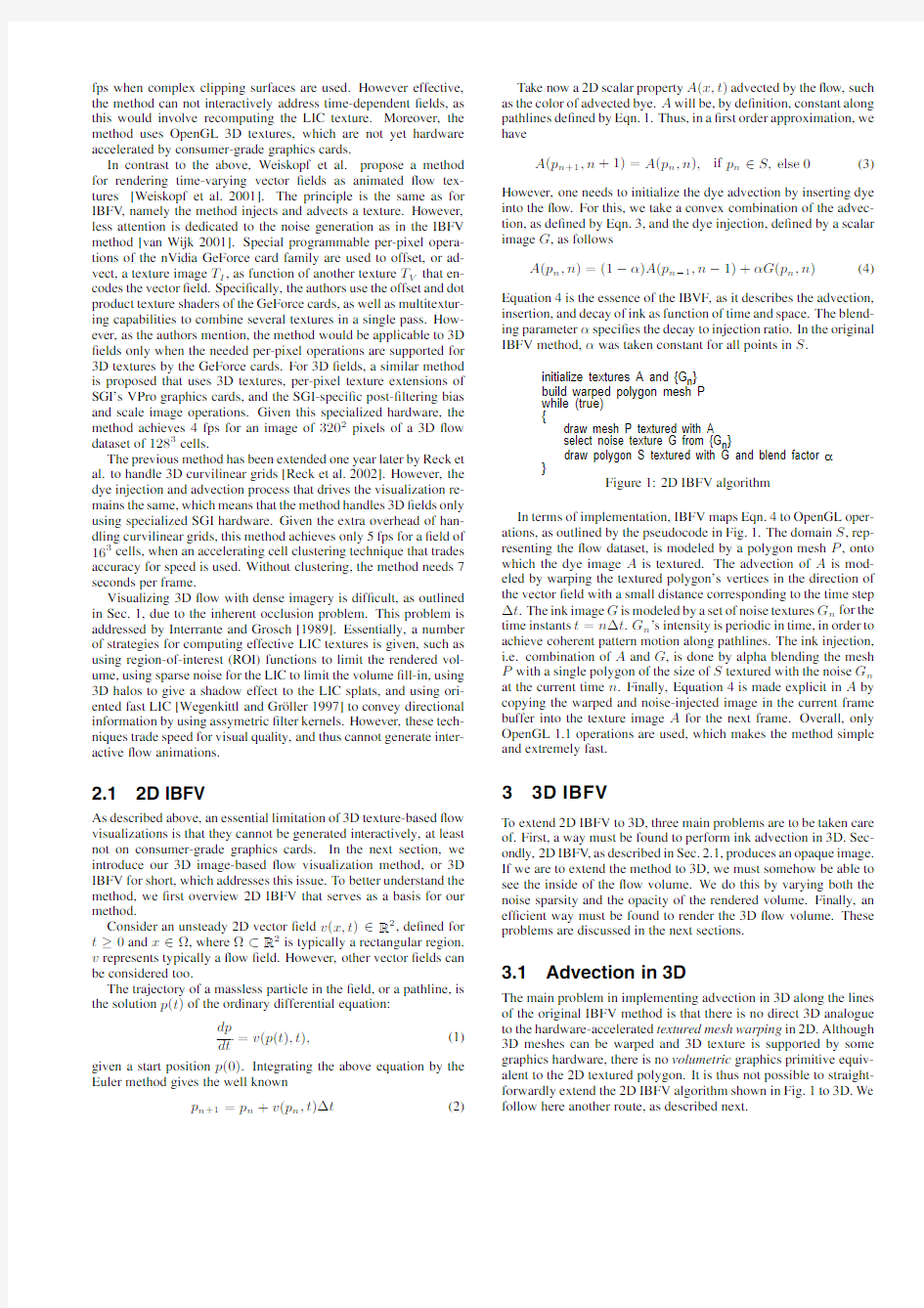

A(p n,n)=(1?α)A(p n?1,n?1)+αG(p n,n)(4) Equation4is the essence of the IBVF,as it describes the advection, insertion,and decay of ink as function of time and space.The blend-ing parameterαspeci?es the decay to injection ratio.In the original IBFV method,αwas taken constant for all points in S.

initialize textures A and {G n}

build warped polygon mesh P

while (true)

{

draw mesh P textured with A

select noise texture G from {G n}

draw polygon S textured with G and blend factor α

}

Figure1:2D IBFV algorithm

In terms of implementation,IBFV maps Eqn.4to OpenGL oper-ations,as outlined by the pseudocode in Fig.1.The domain S,rep-resenting the?ow dataset,is modeled by a polygon mesh P,onto which the dye image A is textured.The advection of A is mod-eled by warping the textured polygon’s vertices in the direction of the vector?eld with a small distance corresponding to the time step ?t.The ink image G is modeled by a set of noise textures G n for the time instants t=n?t.G n’s intensity is periodic in time,in order to achieve coherent pattern motion along pathlines.The ink injection, https://www.360docs.net/doc/77827389.html,bination of A and G,is done by alpha blending the mesh P with a single polygon of the size of S textured with the noise G n at the current time n.Finally,Equation4is made explicit in A by copying the warped and noise-injected image in the current frame buffer into the texture image A for the next frame.Overall,only OpenGL1.1operations are used,which makes the method simple and extremely fast.

33D IBFV

To extend2D IBFV to3D,three main problems are to be taken care of.First,a way must be found to perform ink advection in3D.Sec-ondly,2D IBFV,as described in Sec.2.1,produces an opaque image. If we are to extend the method to3D,we must somehow be able to see the inside of the?ow volume.We do this by varying both the noise sparsity and the opacity of the rendered volume.Finally,an ef?cient way must be found to render the3D?ow volume.These problems are discussed in the next sections.

3.1Advection in3D

The main problem in implementing advection in3D along the lines of the original IBFV method is that there is no direct3D analogue to the hardware-accelerated textured mesh warping in2D.Although 3D meshes can be warped and3D texture is supported by some graphics hardware,there is no volumetric graphics primitive equiv-alent to the2D textured polygon.It is thus not possible to straight-forwardly extend the2D IBFV algorithm shown in Fig.1to3D.We follow here another route,as described next.

We consider,for simplicity of the exposition,that the?ow vol-

ume is aligned with the coordinate axes and that it is discretized as

a regular grid p ijk=(i?x,j?y,k?z),i.e.the grid cells have all sizes?x,?y,and?z.We take now a2D planar slice S par-

allel to the XY plane,at distance k?z measured along the Z axis

(Fig.2a).Consider now a point p on slice S k and three consecu-

tive slices in the Z direction:S?at z=(k?1)?z;S;and S+at

z=(k+1)?z.If the advection time step?t used for integrating Eqn.1is small enough compared to the Z sampling distance?z and maximal Z velocity component max(v Z),i.e.if|v Z|?t0,the Z advection reaching S comes from S?.If v Z=0,there is no advection along the Z axis,so we subsume this case to any of the two former ones, e.g.consider the case v Z≥0.

a)b)

Figure2:a)Slicing the volume.b)Decomposing3D advection

Consider?rst the case v Z<0.With the above assumptions,the advection at the grid point p ijk comes from the point q=p ijk?v(p ijk)?t located between S and S+.To simplify notation,with-out loss of generality,assume that i=j=k=0and that v x<0and v y<0.The quantity A is advected by the?ow,so A(p000,t+?t)=A(q,t).We can evaluate A(q)by trilinear in-terpolation of the eight vertices of the cell containing q(see Fig.2b)

A(q)=(1?z)(1?y)(1?x)A000+z(1?y)(1?x)A001 +(1?z)y(1?x)A010+zy(1?x)A011

+(1?z)(1?y)xA100+z(1?y)xA101

+(1?z)yxA110+zyxA111(5)

where x,y,and z are the local cell coordinates of point q,i.e.x=?v x?t,y=?v y?t,and z=?v z?t,and A ijk are the values of A at the cell corner points p ijk.Denote by A k the sum of the terms in Eqn.5that have a factor(1?z),i.e.

A k=(1?y)(1?x)A000+y(1?x)A010

+(1?y)xA100+yxA110(6) and similarly by A k+1the sum of the terms that have a factor z

A k+1=(1?y)(1?x)A001+y(1?x)A011

+(1?y)xA101+yxA111(7) We can thus rewrite Eqn.5as

A?(p)=A(q)=(1?z)A k+zA k+1(8)where A?(p)is the advection at p if v Z(p)<0.The terms A k and A k+1allow a special interpretation.Indeed,A k is the2D advec-tion caused by v XY,the projection of v,onto the plane S,in the2D rectangular cell(p000,p100,p110,p010).

Similarly,A k+1is the planar2D advection caused by v XY in the 2D cell(p001,p101,p111,p011).Finally,Eqn.8denotes the advec-tion of A from point p001to point p000along the Z axis,i.e.in the ?eld v Z which is the projection of v onto Z.Recall that the above held for v Z<0.If v Z≥0,we deduce a similar relation to Eqn.8, i.e.

A+(p)=A(q)=(1?z)A k+zA k?1(9) where A+(p)is the advection at p if v Z(p)≥0.The only differ-ence here is that we consider the plane S?instead of S+,i.e.the Z advection brings information on the plane S from the opposite di-rection as in the former case v Z A(p)=A?(p)+A+(p)(10) Summarizing,the advection of the scalar quantity A from q to p in the?eld v can be decomposed in a series of2D advection processes in the planes S,S+,and S?,followed by a1D advection along the Z axis from S?to S for those points p having v Z(p)≥0and a 1D advection along the Z axis from S+to S for the points having v Z(p)<0. In the next section,the implementation of Eqn.10is presented. 3.2Advection implementation As explained in the previous section,we decompose the3D advec-tion of a scalar property A in a series of slice planar advections and a series of Z-axis aligned advections.Following this idea,the global 3D advection procedure for a single time step on a volume consist-ing of a set S i of slices,i=0..N?1,is given by the pseudocode in Fig.3: for i = 0 to N-1 { if (i>0) do 1D Z-axis advection from S i-1 to S i if (i do 1D Z-axis advection from S i+1 to S i do 2D IBFV-based advection in the slice S i } (1) (2) (3) Figure3:Advection procedure The planar advection terms of the type A XY in Eqns.8and9can be directly computed by applying the2D IBFV method consider-ing the projection v XY of v to the plane S(step3of the algorithm). Denote now by A k the value of A over all points of S k,for a given slice k.Similarly,denote by v Zk the velocity Z component over the points of S k.From Eqn.8,step1of the algorithm becomes(for?t taken to be1): A k:=(1?max(v Zk?1,0))A k+max(v Zk?1,0)A k?1(11) Similarly,step2becomes A k:=(1?max(?v Zk+1,0))A k+max(?v Zk+1,0)A k+1(12) In the above,the max function is used to consider the two cases v Z<0and v Z≥0explained in Sec.3.1.Note that we evalu-ate the component v Z in the slices S k?1and S k+1to perform the advection.Alternative schemes can be used,e.g.evaluate v Z as the average,or linear interpolation,of the velocity v Zk in the current plane S k and the velocities v Zk?1and v Zk+1in the planes S k?1 and S k+1.Similarly,the planar and Z-advections(steps1,2,3in Fig.3)can be done in different orders,leading to different integra-tion schemes.Given that we require|v Z|?t a)b)c) Figure4:Effect of operation order:a)test con?guration.b)correct advection.c)incorrect advection In the above scheme,the order of the planar and Z-advections is, however,important.Consider,for example,a laminar diagonal?ow (v x,v y,v z)=(1,0,1)and a property A(e.g.ink)nonzero in some area S0and zero elsewhere(Fig.4sketches this as seen along the Y axis).The advection should carry the’in?ow’value A from S0 diagonally along the slices S k,leading to the situation in Fig.4b. This is the result delivered by the algorithm in Fig.3.If,however, we did,for each slice,?rst the2D IBFV advection and then the Z-advection,to name one of the other possible orders,we would get the obviously wrong result in Fig.3c after one time step. Let us look closer at Eqns.11and12which describe the algo-rithm steps1and2.In essence,one performs a convex combination of the property A k(planar advection)over consecutive slices,us-ing the value z which represents the velocity Z component at grid points.As explained above,z is always greater or equal to zero.To generalize this for all points over a slice S k,i.e.for other points than mesh points,we bilinearly interpolate z over the slice S k from the z values at the mesh points. If we were able to implement the above Z advection using hard-ware acceleration,then the complete3D advection algorithm would be hardware accelerated,since the2D IBFV method obviously is so. The next section describes how this can be done. 3.3Hardware accelerated Z advection We start by evaluating,for all mesh points i,j of all slices S k,the quantities v+Zijk=max(v Zijk,0) v?Zijk=max(?v Zijk,0)(13) where v+Zijk and v?Zijk are the absolute values of the Z veloc-ity components in the direction,respectively in opposite direction of the Z axis,on slice S k.For simplicity of notation,we drop the indices i and j,https://www.360docs.net/doc/77827389.html,e v+Zk instead of v+Zijk,and similarly for v?Zk.For every slice k,we encode the above as two OpenGL2D luminance textures.All textures we use are named textures,as this ensures a fast access to texture data.The luminance textures store one component(luminance)per texel.The texture type(as passed to the OpenGL call glTexImage2D)is either GL INTENSITY8 or GL INTENSITY16.This gives8,respectively16bits resolu-tion for the velocity Z component.Since two separate textures are used for v+Zk and v?Zk,an effective range of9,respectively17 bits is used for the velocity Z component.For all our applications, 16bit textures have delivered good results,whereas the8bit reso-lution caused undersampling artifacts for vector?elds with a high Z value range.Note also that the framebuffer resolution,used to ac-cumulate the results,is also important for the overall computation accuracy.The spatial(X,Y)texture resolutions determine a trade-off between representation accuracy and memory use.As a simple rule,these textures shouldn’t be larger than the vector dataset’s XY resolution,since this is the complete Z velocity information to be encoded.Practically,resolutions of642and1282have given very good results. Now we can simply rewrite the Z advection equations(11) and(12)as A k:=(1?v+Zk?1)A k+v+Zk?1A k?1(14) A k:=(1?v?Zk+1)A k+v?Zk+1A k+1(15) where:=,in the above,denotes assignment.Similarly,we imple-ment the property A k as a set of2D RGBA textures,one for every slice plane S k.The XY resolution of the property textures is exactly analogous to the resolution of the single RG B texture used2D IBFV. Remark,however,that we need a texture alpha channel,whereas2D IBFV did not.The use of this channel is explained in Sec.3.5. glDisable(GL_BLEND); glBindTexture(GL_TEXTURE_2D,A[k-1]); drawQuad(); // A = A k-1 (draw previous slice) glEnable(GL_BLEND); glBlendFunc(GL_ZERO,GL_SRC_COLOR); glBindTexture(GL_TEXTURE_2D,v+z[k-1]); drawQuad(); // A = v+Zk-1A k-1 (modulate by v +Zk-1 ) glBindTexture(GL_TEXTURE_2D,temp); glCopyTexImage2D(GL_TEXTURE_2D,0,GL_RGBA,0,0,N,N,0); // temp = v+Zk-1A k-1 (save advection from previous slice) glDisable(GL_BLEND); glBindTexture(GL_TEXTURE_2D,A[k]); drawQuad(); // A = A k (draw current slice) glEnable(GL_BLEND); glBlendFunc(GL_ZERO,GL_ONE_MINUS_SRC_COLOR); glBindTexture(GL_TEXTURE_2D,v+z[k-1]); drawQuad(); // A = (1-v+Zk-1) A k (modulate by 1-v +Zk-1 ) glBlendFunc(GL_ONE,GL_ONE); glBindTexture(GL_TEXTURE_2D,temp); drawQuad(); // A = v+Zk-1A k-1 + (1-v+Zk-1) A k (add of previous slice) glBindTexture(GL_TEXTURE_2D,A[k]); glCopyTexImage2D(GL_TEXTURE_2D,0,GL_RGBA,0,0,N,N,0); // A k = A (save result in texture A k) Figure5:OpenGL code implementing Z advection The velocity-coding textures allow us to ef?ciently implement the above equations using graphics hardware.Equation14is implemented by the OpenGL code in Fig. 5.The OpenGL GL TEXTURE ENV MODE value passed to the glTexEnv function is always GL REPLACE,i.e.we do not use mesh vertex colors for the textures.All effects are obtained by varying the blending modes via the glBlendFunc function.A similar code is needed to imple-ment Eqn.15.Just as in the2D IBFV case,the textures are created with GL LINEAR values for the GL TEXTURE MIN FILTER and the GL TEXTURE MAX FILTER parameters of glTexParame-ter,to ensure bilinear interpolation.The function drawQuad draws a single textured quadrilateral that covers the whole im-age.The variable temp is one RGBA texture used as a temporary workspace.The viewing parameters are such that there is a one to one mapping of the slices S k to the viewport. Overall,our advection uses?ve textured quad drawing operations and two glCopyTexImage2D operations per slice and per advec-tion direction,done into P-buffers,for speed reasons.This has the extra advantage of not needing an on-screen window showing the inner working of the advection process. 3.4Noise injection:Preliminaries The last section has shown how3D advection can be implemented using hardware acceleration.The second important question is now which scalar property to advect.We follow2D IBFV,i.e.inject a spatially random,temporally periodic noise signal(see[van Wijk 2001]for a detailed analysis).In the following,denote by A n= (A In,Aαn)a2D image at moment t=n?t,consisting of an inten- sity component A In and an alpha(opacity)component Aαn.The original2D IBFV equation(4)becomes now,for every3D slice: A n=(1?α)A n?1+αG n(16) (we drop the spatial p n parameter for conciseness).Here,G n is a 2D texture modelling the noise injected at time step n,consisting of an intensity G In and an opacity Gαn component.Just as in the2D IBFV case,the noise should be periodical in time,as this creates the impression of color continuity along streamlines.In our3D case,we add an extra spatial dimension,i.e.the Z axis,to the noise.Just as for the temporal dimension,the noise must be a continuous,periodic signal along the Z axis,to minimize high frequency artifacts.For a full discussion hereof,see the original paper[van Wijk2001]. In2D IBFV,both Aαn and Gαn were identically one(i.e. opaque)everywhere.More precisely,the actual2D IBFV imple-mentation used RGB,and not RGBA,textures.Hence,if we directly implement Eqn.16in the3D case,we obtain a fully opaque?ow volume consisting of gray value ink.Although’correct’,this result is useless for visualizing the ?ow. a b d c Figure6:Noise injection:a)and b)2D IBFV noise mode.c)and d) 3D IBFV improved noise A?rst idea to tackle this problem is to use the texture alpha chan-nel to model transparency,i.e.to use a Gαn which is not identically 1,but a noise signal similar to G In.Texels with a low alpha would correspond to’transparent’noise,i.e.holes in the texture slices, through which one could see deeper into the?ow volume.Unfortu-nately,this method produces visualizations which have a poor con-trast and which,after a while,tend to?ll up the volume with texels having the same(average)transparency.When rendered by alpha compositing(see Sec.3.6),little is seen in the depth of the?ow.Fig-ure6a(see also Color Plate)shows this for a vortex?ow in which red ink has been injected to trace a streamline.Discarding lower alpha values by using OpenGL alpha testing improves the results somewhat.However,the red streamline is still not visible(Fig.6b). Parameter tuning can produce only mild improvements,as the following analysis shows.If anαclose to zero is used,in order to diminish the noise injection,then the’holes’in the noise have no chance to show up,and the visualization becomes quickly blurred. If a highαis used,to make the noise more prominent,then this will erase the current information(second term).The’ink decaying’ef-fect diminishes,and one sees only the noise variation in time.If one uses a sparse noise pattern,i.e.a Gαn which has mostly(very)low values,in conjunction with an average(0.5)to high(0.9..1)α,then the occlusion effect diminishes indeed.However,the method may now easily fall into the other extreme,where too little ink is injected, and the injected’holes’quickly erase it. Overall,we have found that it is very hard to get a parameter setting which produces a high-contrast visualization,both in terms of injected noise color and transparency.The visualization quickly tends to get blurred and produce low-contrast slices in the alpha channel.Although such visualizations do convey some insight into the?ow,due to their animated nature,we need a better solution for the contrast problem.This is described in the next section. 3.5Noise injection in3D The key to producing a high-contrast3D?ow visualization is to re-?ne Eqn16.The main problem of this model is that it is not able to express what to inject(i.e.ink or’holes’)and where to inject it in the volume independently.Instead of the original formulation,we propose to use A n=(1?H n)A n?1+H n G n(17) Here,we replace the constantαparameter from Eqn.16with a noise function H n.In other words,we use now two noise signals G n and H n instead of a single one.Both signals are periodic func-tions of Z position and time,as before,so they are stored as two sets of2D textures,two textures per Z slice.The noise signal H n is a single-channel alpha-texture.It describes where to inject the noise. If H n=1at some point,it means we inject the noise signal in G n at that point.If H n=0,we inject nothing at that point. The signal G n may be a luminance-alpha(LA)or RGBA sig-nal,depending whether we wish to inject gray,respectively colored noise.It describes what to inject at a given point,both in terms of the color(L or RGB)and the’hole’or’matter’injection(the A chan-nel).Although we have experimented with color noise of random hue,monochrome noise(whether gray or color)has given the best results. Using two noise signals instead of one allows us to specify what and where we inject independently.To inject fully transparent holes at a few locations,we use a G n having texels with a zero alpha and a H n sparsely populated with high values.In comparison with the model given by Eqn.16,we can now inject a fully transparent hole close to a fully opaque ink spot or a location where no injection at all takes place.Figure6c and d clearly show that this approach delivers a more transparent,but still highly contrasting visualization.Now the red streamline inside the?ow is clearly visible. We next describe the design of the G n and H n noise signals.Just as for the2D IBFV,we compute the noise H n at a moment t and spa-tial position x,y,and slice k,by using a periodic’transfer function’h,phase-shifted with t,from a start moment given by a white noise signal N: H(x,y,k,t)=h((N(x,y,k)+t)mod T)(18) where T=t N?t is the time period of t N different moments.The noise components G In and Gαn are computed analogously,using two more functions g I and gα.Note also that the phase offset(i.e. N signal)used to compute H is different than the one used for G.If h I a)b)c) Figure7:Transfer functions for a)H n,b)G In,and c)Gαn it were the same,the two noises G and H would be in phase,which would create visible artifacts. We choose the functions h,g I,and gαas follows(see also Fig.7). For h we propose h(t)= 0,t<τh t,t>τh(19) In other words,nothing is injected for t<τh,whereas strong injec-tion takes place for t>τh. For g I,we can use a similar function,controlled by a parameter τI.However,we found the step function g I(t)= 0,t<τI 1,t>τI(20) better.This would inject black ink for t<τI and white ink other-wise.Since the variable opacity already modulates the noise blend-ing,we found the above ink injection to be suf?cient,i.e.we didn’t make use of gray ink. Finally,for gαwe propose gα(t)= 0,t<τα t?τα 1?τα ,t>τα(21) Hence,holes are injected for t<τα.For t>τα,ink is injected, its color being given by g I.The above gαproduces asymmetric ad-vection patterns,thinner in the sense of the?ow and thicker in the opposite direction.This may serve as an indication of the?ow sense. Choosing gα(t)= 0,t<τα 1?t 1?τα ,t>τα(22) will reverse the orientation of the?ow patterns.For concrete set- tings for theτh,τI,andταparameters,and a summary of all pa- rameters,see Sec.4. The noise injection modelled by Eqn17can be ef?ciently imple- mented using graphics hardware.For this,we actually precompute and store the signals H n and Q n=G n H n as2D textures.As in the original2D IBFV,these textures may be smaller than the prop- erty textures A,to save memory and rendering time.Texture repeat and stretch operations are used to map the H and Q textures’size P Q N to the size A N of the textures A.Moreover,we store only textures for t N time instants and HQ ZN values of the Z coordinate (slice index k)and then repeat them periodically,both in Z direction and time(see Eqn.18).Normally,HQ ZN number of Z slices.Overall,we store thus HQ ZN t N pairs of H and Q textures. The noise injection algorithm for a given Z slice k is shown in Fig.8.Here,we denote the noises G and H at time step n in slice k by G kn and H kn respectively.As noise injection is done after the Z advection and2D IBFV steps,the drawable contains the signal A k when the injection starts.Recall also that,when alpha-textures are used in the GL REPLACE mode of glTexEnv,they only affect the glEnable(GL_BLEND); glBlendFunc(GL_ZERO,GL_ONE_MINUS_SRC_ALPHA); glBindTexture(GL_TEXTURE_2D,P[k][t]); drawQuad(); // A = (1?H k n) A k (clear areas where we inject something) glBlendFunc(GL_ONE,GL_ONE); glBindTexture(GL_TEXTURE_2D,Q[k][t]); drawQuad(); // A = (1?H kn) A k + H kn G kn(add ink and/or holes to the injection areas) Figure8:OpenGL code implementing noise injection destination alpha channel(e.g.step1of the code in Fig.8).Overall, the noise injection we propose uses just two textured quad draws,as compared to the original2D IBFV which used one similar operation. Putting it all together,we obtain the complete3D IBFV method, shown in pseudocode in Fig.9.The method has two phases,just for (k = 0; k { for (n=0; n precompute noise textures H kn and Q kn = G kn H kn build warped polygon mesh P k create texture A k } while(true) // execution { for(k = 0; k { clear drawable perform the Z advection from slice k?1 to k perform the Z advection from slice k+1 to k draw mesh P k textured with current drawable inject noise using textures Q kn and H kn copy drawable to texture A k } display results (draw textures A k back to front) } Figure9:Complete IBFV3D method like in the2D IBFV case(compare Fig.9with Fig.1).In the initial-ization phase,the noise and property textures(H kn,Q kn,and A k) as well as the warped polygon meshes P k are built for all Z slices k(and all time instants n,for the noise textures).In the execution phase,the method is structurally similar to the2D IBFV case,except for the new Z advection step. 3.6Rendering As the3D IBFV method is running,we visualize its results by draw-ing,in back to front order,the RGBA texture slices A k.Blending is enabled and the glBlendFunc function’s source and destina-tion factors are,as usually for this technique,set to GL SRC ALPHA and GL ONE MINUS SRC ALPHA.An important enhancement is obtained by enabling the OpenGL alpha test and cutting off all al-pha values below a givenαcut.Settingαcut between0.01and0.1 allows one to quickly’coarsen’the?ow volume by discarding the al-most transparent texels.Although not visible in separate slices,such texels can accumulate in the back to front blend and increase the overall opacity.Figure11shows a?ow volume rendered for three differentαcut values,from three viewpoints.Clearly,the higher αcut values allow more insight in the?ow. We should remark that the choice of the Z slicing direction is,so far,arbitrary.For a regular dataset,an ef?cient choice is to minimize the Z slices count,i.e.choose Z as the axis having the least cell count from the three axes.Visualizing the back-to-front rendered slices (Sec.3.6)will de?nitely produce poor results if the slicing direction is orthogonal to the line of sight,as we then tend to look through the slices.Still,for the various?ow volumes we visualized,this didn’t seem to be a major hindrance for the users.If desired,as usually done in many volume rendering applications,the method can detect this situation and change the slicing direction interactively,as the viewpoint changes. A considerably better rendering can be obtained if 3D textures are used.In this case,the 2D textures A k can be slices in a single 3D texture volume.The whole 3D IBFV method stays the same.How-ever,a visibly improved rendering can be done by drawing,back to front,a number of 2D polygons parallel to the viewport,that use the 3D texture.This is exactly what most volume rendering meth-ods do.Remark that the use of 3D texture is,in this case,strictly needed for rendering the 3D IBFV results,not for producing them.Our main problem here was that (reasonably large)3D textures are seldom present with hardware acceleration in the graphics cards we availed of (GeForce 2and 3). a b d e c Figure 10:Parameter settings for 3D IBFV 4D ISCUSSION We shall discuss now the parameter settings and the method’s per-formance and memory requirements. Compared to 2D IBFV ,the 3D method introduces several new pa-rameters.Here follows the complete parameter list of 3D IBVF (see Sec.3.5for details): ?A N :resolution of the (4bytes,RGBA)property textures A k ?Z N :number of Z slices ?v N :resolution of the velocity (2byte,alpha)textures ?HQ N :resolution of textures H (1byte,luminance)and Q (2bytes,luminance alpha)?HQ ZN :Z resolution of the noise textures H and Q ?t N :time resolution of noise textures ?X N ,Y N :resolution of meshes (12bytes per vertex)?τh :noise injection strength (see Sec.3.5)?τα:ink to hole injection ratio ?τI :black to white noise ink ratio ?αcut :alpha test threshold The visualization is strongly affected by the last four parameters (explained in Sec.3.5).τh controls how much noise,consisting of holes and/or ink,is injected.Good values for τh range from 0.01to 0.1.Higher values tend to produce too noisy images.ταis the ink to hole injection ratio.If close to 1,holes are injected,i.e.matter is ’carved out’of the ?ow volume.If close to 0,ink is injected.Good values for ταare 0.9or higher,in order to produce a sparse ?ow vol-ume.τI controls the ink luminance.If close to 0,more white ink is used.If close to 1,more black ink is used.Good values range around 0.1,to favor white ink. As for the 2D IBFV ,we can inject ink at chosen locations to trace streamlines.Figure 10shows a helix ?ow in which red ink was in-jected close to the back plane’s center.Besides color,we set the ink’s alpha to 1(opaque).Using a high αcut discards most of the noise but keeps the opaque ink (Fig.10d).Another idea is to set,for the noise injected close to the in?ow’s center,a high alpha.This alpha is advected into the helix core,which becomes opaque.Next,we set a high αcut and discard all outside the core (Fig.10b).If low alpha noise is used instead,we ’carve out’the core (Fig.10c).Another effective option is to inject strong noise for a few steps (Fig.10a)and then turn it off by setting τP to zero.This creates a more pleasant,smoother visualization (Fig.10e).This technique was used also for the ?ow in Fig.11. As 2D IBFV ,we are aware that our 3D method is limited in the range of velocities it can display.Speci?cally,both the maximum values for the XY and Z velocities must be smaller than the XY and Z cell sizes divided by the time step.In practice,we handle this by either using a small time step or by clamping the higher velocities.We consider now the total memory (in bytes)our method needs to store the textures and polygon meshes: M =2Z N (6X N Y N +2A 2N +2v 2 N )+3HQ ZN HQ N t N (23) Given the settings:A N =512,X N =Y N =Z N =50,v N =HQ N =HQ ZN =64,and t N =32,we obtain M =59MBytes.This just ?ts into our 64MB GeForce cards.If M exceeds the graph-ics card memory,transfer to the normal memory takes place,which severely degrades the rendering performance. The rendering time is,as expected,proportional with the texture sizes,the number of slices Z N ,and the mesh resolution X N Y N .For the GeForce 2and GeForce 3Ti 400cards,the mesh resolu-tion X N Y N was by far the dominant factor in the rendering time.This was much less severe for the standard GeForce 3cards,where the dominant factor seems to be the texture resolution A N .A few rendering timings are shown in Fig.12.We preferred using smaller Z N than X N and Y N values as this delivered higher performance,as expected.Besides X N ,Y N ,and A N ,all con?gurations use the settings described for the memory estimation above,and run on a Pentium III PC at 800MHz with Windows 2000.Given that the 2D IBFV produced 60frames per second on the same hardware and that we render 50Z slices,3D IBFV delivers,so to speak,more through-put per rendered slice. X res =Y res 20 30 40 60 80GeForce 3 Ti 9.0 4.5 3 1.6 0.9GeForce 3 3.0 2.9 2.9 2.4 2.2 11.0 10.8 10.6 10.4 9.0 10.2 6.3 3.9 2.2 1.2A res 512512256256 Figure 12:3D IBFV timings,frames per second Another discussion point is the usage of the velocity-encoding lu-minance textures (Sec.3.3).An alternative approach would be to encode the Z velocities as 2D mesh vertex colors.In this case,the Z advection would be done by drawing the textured mesh with the GL MODULATE mode of the glTexEnv OpenGL function,instead of blending two textures,as we do now.This approach (which we Figure 11:3D ?ow rendered with αcut =0.01(top),αcut =0.02(middle),and αcut =0.05(bottom) tried ?rst)has several disadvantages.First,storing a N 2quad mesh is much more expensive than storing a N 2luminance texture.Even if mesh coordinates are somehow shared,we must still store a full RGBA value per vertex,as OpenGL 1.1does not allow storing only vertex luminance https://www.360docs.net/doc/77827389.html,ing velocity textures,we store just the luminance values.Secondly,we found out that,on several nVidia GeForce 2and 3cards,drawing a single quad with a N 2texture is much faster than drawing an N 2quad mesh with vertex colors.Note,however,that the two approaches are functionally identical. 5C ONCLUSIONS 3D IBFV is a method for visualizing 3D ?uid ?ow as moving tex-ture patterns using consumer graphics hardware.As its 2D counter-part,3D IBFV offers a framework to create several ?ow visualiza-tions (stream ’tubes’,LIC-like patterns,etc),high frame rates,and a simple OpenGL 1.1implementation,without 3D textures.How-ever,while 2D IBFV easily handles instationary ?elds,3D IBFV currently handles only the time independent case,as instationary ?elds require a continuous update of the velocity textures.3D IBFV produces higher frame rates on less specialized hardware than other 3D ?ow visualization methods.We extend the 2D IBFV noise con-cept by adding ’opacity noise’.Combined with alpha testing,we get a simple and interactive way to examine ?ow volumes in the depth dimension.We present the implementation and parameter settings in detail,so that one can readily apply it.We see several extensions of 3D IBFV .New noise and/or ink injection designs can produce 3D ?ow domain decompositions,stream surfaces,curved arrow plots,and many other visualizations.Secondly,using 3D texture,as these become more widely available,may lead to a simpler,more accurate 3D IBFV .Finally,using DirectX ?oating-point textures may provide better accuracy.We plan to investigate these options in the near fu-ture. R EFERENCES C LYNE ,J.,AN D D ENNIS ,J.1999.Interactive direct volume rendering of time-varying data.Proc.IEE E VisSym ’99,eds.E.Gr¨o ller,H.Lof-felmann,W.Ribarsky,Springer,pp.109-120.C RAWFIS ,R.,M AX ,N.,B ECKER ,B.,AND C ABRAL ,B.1993.V olume rendering of scalar and vector ?elds at llnl.Proc.IEEE Supercomputing ’93,IEEE CS Press,pp.570-576.G LAU ,T.1999.Exploring instationary ?uid ?ows by interactive volume movies.Proc.IEEE VisSym ’99,eds.E.Gr¨o ller,H.Loffelmann,W.Rib-arsky,Springer,pp.277-284.H AUSER ,H.,L ARAMEE ,R.S.,AND D OLEISCH ,H.2002.State-of-the-art report 2002in ?ow visualization.Tech.Report TR-VRVis-2002-003,VRVis Research Center,Vienna,Austria .I NTERRANTE ,V.,AND G ROSCH ,C.1989.Visualizing 3d ?ow.IEEE Computer Graphics and Applications,18(4),1998,pp.49-52.R ECK ,F.,R EZK -S ALAMA ,C.,G ROSSO ,R.,AND G REINER ,G.2002.Hardware accelerated visualization of curvilinear vector ?elds.Proc.VMV ’02,eds.T.Ertl,B.Girod,G.Greiner,H.Niemann,H.P .Seidel,Stuttgart,pp.228-235.R EZK -S ALAMA ,C.,H ASTREITER ,P.,C HRISTIAN ,T.,AND E RTL ,T.1999.Interactive exploration of volume line integral convolution based on 3d texture mapping.Proc.IEEE Visualization ’99,eds.D.Ebert,M.Gross,B.Hamann,IEEE CS Press,pp.233-240. VAN W IJK ,J.J.2001.Image based ?ow https://www.360docs.net/doc/77827389.html,puter Graphics (Proc.SIGGRAPH ’01),ACM Press,pp.263-279. W EGENKITTL ,R.,AND G R ¨OLLER ,E.1997.Fast oriented line integral convolution for vector ?eld visualization via the internet.Proc.IEEE Vi-sualization ’97,IEEE CS Press,pp.119-126. W EISKOPF ,D.,H OPF ,M.,AND E RTL ,T.2001.Hardware-accelerated visualization of time-varying 2d and 3d vector ?elds by texture advection via programmable per-pixel operations.Proc.VMV ’01,eds.T.Ertl,B.Girod,G.Greiner,H.Niemann,H.P .Seidel,Stuttgart,pp.439-446. “3D打印与激光再制造技术”学习心得 张向军 一、对3D打印技术的基本认知 3D打印就是将计算机中设计的三维模型分层,得到许多二维平面图形,再利用各种材质的粉末或熔融的塑料或其它材料逐层打印这些二维图形,堆叠成为三维实体。作为3D打印技术包括了三维模型的建模、机械及其自动控制(机电一体化)、模型分层并转化为打印指令代码软件等技术。 不远的将来,我们完全可以用电脑把自己想要的东西设计出来,然后进行三维打印,就像我们现在可以在线编辑文档一样。通过电子设计文件或设计蓝图,3D打印技术将会把数字信息转化为实体物品。当然,这还不是3D打印的全部,3D打印最具魔力的地方是,它将给材料科学、生物科学带来翻天覆地的变化,最终的结果是科学技术和创新呈现爆发式的变革。 3D打印最直接的效益,就是对材料行业的拉动,随着3D产业规模的扩大,必将推动材料行业的不断变革。 3D打印机本是一种相对粗陋的机器,从工厂诞生,然后走进了家庭、企业、学校、厨房、医院、甚至时尚T台。但是一旦将3D打印机与当今发达的数字技术相结合,奇迹就发生了。再加上互联网的普及以及微小而成本低廉的电子电路的广泛使用,在材料科学和生物技术取得日新月异进步的今天,3D打印应用前景简直令人不可想象! 二、3D打印的应用前景 有了3D激光扫描仪,我们可以从市场上采购造型优美的后配重成品,采用逆向工程技术进行3D扫描后,适当修型再转化为3D图纸为我所用。修改后配重也可用3D打印机做出样品,这种3D模型外观上、结构上与设计实体完全一致, 非常直观。 三、3D打印技术应用的局限性 3D打印结合了智能制造技术、自动控制技术和新材料技术,确实具备了引领第三次工业革命的有利条件,但是3D打印技术的大规模工业化应用还存在诸多限制。首先3D打印的材料品种单一,价格也过于昂贵,用于打印样品则可,用于批量生产则经济上决不可行。其次从效率上来说,采用3D打印技术工艺生产一个零件还是过于浪费时间了。其三,3D打印零部件的内在质量可靠性仍然存疑。采用金属粉末加上激光烧结和激光熔覆技术打印的零部件,其内在金属结构与采用现代冶金技术生产的零部件,在组织的致密性、结合的紧密性方面差距很大,影响3D打印零部件的结构强度。因此3D打印技术现阶段真正的实际应用,仅仅在产品研发过程的样品制造、工业生产中的砂型制造、生物医学上的器官仿生等方面较为成熟。那些3D技术打印的裙子、鞋子不过是艺术家自娱自乐的哗众取宠罢了。至于劳心费力的捣鼓出一个3D打印的水果,除了追求标新立异之外没有任何现实意义! 四、对我国发展3D产业技术的几点思考 3D技术产业化发展有其局限性,存在诸多急需突破的技术瓶颈,能否真正引领第三次工业革命浪潮尚无定论。但3D 打印毕竟是代表制造技术发展方向的前沿技术,从国家层面还是要提前谋划、提前布局、紧密跟踪。首先,要从小学阶段开始在学校普及3D打印知识教育,让孩子们从小接触3D打印技术,有利于培养他们的动手能力和创新精神。桌面级3D打印机价格已经非常便宜,完全具备全面推广条件。其次,着力培养大型3D打印和扫描技术企业,组建3D产业技术国家队,壮大企业实力,参与国际竞争。目前我国3D制造企业规模偏小,实力不强,大多数在破产的边缘苦苦挣扎,很难与国外的大公司展开真正意义上的 商务公文写作目录 一、商务公文的基本知识 二、应把握的事项与原则 三、常用商务公文写作要点 四、常见错误与问题 一、商务公文的基本知识 1、商务公文的概念与意义 商务公文是商业事务中的公务文书,是企业在生产经营管理活动中产生的,按照严格的、既定的生效程序和规范的格式而制定的具有传递信息和记录作用的载体。规范严谨的商务文书,不仅是贯彻企业执行力的重要保障,而且已经成为现代企业管理的基础中不可或缺的内容。商务公文的水平也是反映企业形象的一个窗口,商务公文的写作能力常成为评价员工职业素质的重要尺度之一。 2、商务公文分类:(1)根据形成和作用的商务活动领域,可分为通用公文和专用公文两类(2)根据内容涉及秘密的程度,可分为对外公开、限国内公开、内部使用、秘密、机密、绝密六类(3)根据行文方向,可分为上行文、下行文、平行文三类(4)根据内容的性质,可分为规范性、指导性、公布性、陈述呈请性、商洽性、证明性公文(5)根据处理时限的要求,可分为平件、急件、特急件三类(6)根据来源,在一个部门内部可分为收文、发文两类。 3、常用商务公文: (1)公务信息:包括通知、通报、通告、会议纪要、会议记录等 (2)上下沟通:包括请示、报告、公函、批复、意见等 (3)建规立矩:包括企业各类管理规章制度、决定、命令、任命等; (4)包容大事小情:包括简报、调查报告、计划、总结、述职报告等; (5)对外宣传:礼仪类应用文、领导演讲稿、邀请函等; (6)财经类:经济合同、委托授权书等; (7)其他:电子邮件、便条、单据类(借条、欠条、领条、收条)等。 考虑到在座的主要岗位,本次讲座涉及请示、报告、函、计划、总结、规章制度的写作,重点谈述职报告的写作。 4、商务公文的特点: (1)制作者是商务组织。(2)具有特定效力,用于处理商务。 (3)具有规范的结构和格式,而不像私人文件靠“约定俗成”的格式。商务公文区别于其它文章的主要特点是具有法定效力与规范格式的文件。 5、商务公文的四个构成要素: (1)意图:主观上要达到的目标 (2)结构:有效划分层次和段落,巧设过渡和照应 (3)材料:组织材料要注意多、细、精、严 (4) 正确使用专业术语、熟语、流行语等词语,适当运用模糊语言、模态词语与古词语。 6、基本文体与结构 商务文体区别于其他文体的特殊属性主要有直接应用性、全面真实性、结构格式的规范性。其特征表现为:被强制性规定采用白话文形式,兼用议论、说明、叙述三种基本表达方法。商务公文的基本组成部分有:标题、正文、作者、日期、印章或签署、主题词。其它组成部分有文头、发文字号、签发人、保密等级、紧急程度、主送机关、附件及其标记、抄送机关、注释、印发说明等。印章或签署均为证实公文作者合法性、真实性及公文效力的标志。 7、稿本 (1)草稿。常有“讨论稿”“征求意见稿”“送审稿”“草稿”“初稿”“二稿”“三稿”等标记。(2)定稿。是制作公文正本的标准依据。有法定的生效标志(签发等)。(3)正本。格式正规并有印章或签署等表明真实性、权威性、有效性。(4)试行本。在试验期间具有正式公文的法定效力。(5)暂行本。在规定 D 打 印 实 践 报 告 班级:工设一班 学号:311317040124 姓名:胡明亮 3d打印结课总结 我在网络上查阅到3D打印是快速成型技术的一种,它是一种以数字模型文件为基础,运用粉末状金属或塑料等可粘合材料,通过逐层打印的方式来构造物体的技术。3D打印通常是采用数字技术材料打印机来实现的。常在模具制造、工业设计等领域被用于制造模型,后逐渐用于一些产品的直接制造,已经有使用这种技术打印而成的零部件。该技术在珠宝、鞋类、工业设计、建筑、工程和施工(AEC)、汽车,航空航天、牙科和医疗产业、教育、地理信息系统、土木工程、枪支以及其他领域都有所应用。3D打印存在着许多不同的技术。它们的不同之处在于以可用的材料的方式,并以不同层构建创建部件。3D打印常用材料有尼龙玻纤、耐用性尼龙材料、石膏材料、铝材料、钛合金、不锈钢、镀银、镀金、橡胶类材料。 3d打印技术的出现给工业设计的发展带来了新的契机,以往我们使用手绘、计算机渲染等方式来表达设计造型,这些方式虽然直观但并没有实体模型来的生动。同时3d打印的出现使得原始的设计流程变得精简,使设计师能够专注于产品形态创意和功能创新,即设计即生产。产品的造型设计向着多样化方向发展,由于3d打印的出现某些产品的制造出现了转机。通俗地说,3D打印机是可以“打印”出真实的3D物体的一种设备,比如打印一个机器人、打印玩具车,打印各种模型,甚至是食物等等。之所以通俗地称其为“打印机”是参照了普通打印机的技术原理,因为分层加工的过程与喷墨打印十分相似。这项打印技术称 为3D立体打印技术。 在实习中我了解到了打印的基本原理和步骤,首先在电脑中安装打印机驱动程序,然后导入模型图调试机器,开始打印,打印过程中打印机将材料加热融化形成流体,最后流体经过导入的程序控制在底盘上一层一层的形成模型。也就是说日常生活中使用的普通打印机可以打印电脑设计的平面物品,而所谓的3D打印机与普通打印机工作原理基本相同,只是打印材料有些不同,普通打印机的打印材料是墨水和纸张,而3D打印机内装有金属、陶瓷、塑料、砂等不同的“打印材料”,是实实在在的原材料,打印机与电脑连接后,通过电脑控制可以把“打印材料”一层层叠加起来,最终把计算机上的蓝图变成实物。 我们使用的都是桌面级的打印机,打印出模型的分辨率和大小都有很大的限制。我在新闻上了解到几年前就有打印出的金属手枪,无人飞机等问世了,前年我国也有“土豪金”汽车问世,这些都说明3d打印的前景十分广阔,将来能够应用到医疗、军事、建筑、航天等各个领域。但就目前来看3d打印的发展还有很长一段路要走,首先材料就是一个大问题,3D打印的产品只能看不能用,因为这些产品上不能加上电子元器件,无法为电子产品量产。3D打印即使不生产电子产品,但受材料的限制,可以生产的其他产品也很少,即使生产出来的产品,也无法量产,而且一摔就碎。在这方面我也一些体会,因为我们所使用的打印机材料就受到一定限制。同时,3d打印如果真的发展下去也会收到各个方面的制约,比如日本的一个青年就因为打印枪支而被起诉,更重要的是知识产权将会收到更大的冲击,如今的中国山寨抄袭横行,更何况3d打印普及以后呢。 在3d打印实习中也出现了一些问题,比如打印前的设置参数有问题导致模型在打印过程中坍塌,结束时取下底座不小心导致模型损坏等…但总的来说我还是收获了很多,熟悉了基本的打印机操作步骤,了解了打印原理和注意事项,这为我以后打印自己的设计积累了丰富的经验。 关于会议纪要的规范格式和写作要求 一、会议纪要的概念 会议纪要是一种记载和传达会议基本情况或主要精神、议定事项等内容的规定性公文。是在会议记录的基础上,对会议的主要内容及议定的事项,经过摘要整理的、需要贯彻执行或公布于报刊的具有纪实性和指导性的文件。 会议纪要根据适用范围、内容和作用,分为三种类型: 1、办公会议纪要(也指日常行政工作类会议纪要),主要用于单位开会讨论研究问题,商定决议事项,安排布置工作,为开展工作提供指导和依据。如,xx学校工作会议纪要、部长办公会议纪要、市委常委会议纪要。 2、专项会议纪要(也指协商交流性会议纪要),主要用于各类交流会、研讨会、座谈会等会议纪要,目的是听取情况、传递信息、研讨问题、启发工作等。如,xx县脱贫致富工作座谈会议纪要。 3、代表会议纪要(也指程序类会议纪要)。它侧重于记录会议议程和通过的决议,以及今后工作的建议。如《××省第一次盲人聋哑人代表会议纪要》、《xx市第x次代表大会会议纪要》。 另外,还有工作汇报、交流会,部门之间的联席会等方面的纪要,但基本上都系日常工作类的会议纪要。 二、会议纪要的格式 会议纪要通常由标题、正文、结尾三部分构成。 1、标题有三种方式:一是会议名称加纪要,如《全国农村工作会议纪要》;二是召开会议的机关加内容加纪要,也可简化为机关加纪要,如《省经贸委关于企业扭亏会议纪要》、《xx组织部部长办公会议纪要》;三是正副标题相结合,如《维护财政制度加强经济管理——在xx部门xx座谈会上的发言纪要》。 会议纪要应在标题的下方标注成文日期,位置居中,并用括号括起。作为文件下发的会议纪要应在版头部分标注文号,行文单位和成文日期在文末落款(加盖印章)。 2、会议纪要正文一般由两部分组成。 (1)开头,主要指会议概况,包括会议时间、地点、名称、主持人,与会人员,基本议程。 (2)主体,主要指会议的精神和议定事项。常务会、办公会、日常工作例会的纪要,一般包括会议内容、议定事项,有的还可概述议定事项的意义。工作会议、专业会议和座谈会的纪要,往往还要写出经验、做法、今后工作的意见、措施和要求。 (3)结尾,主要是对会议的总结、发言评价和主持人的要求或发出的号召、提出的要求等。一般会议纪要不需要写结束语,主体部分写完就结束。 三、会议纪要的写法 根据会议性质、规模、议题等不同,正文部分大致可以有以下几种写法: 1、集中概述法(综合式)。这种写法是把会议的基本情况,讨 titlesec&titletoc中文文档 张海军编译 makeday1984@https://www.360docs.net/doc/77827389.html, 2009年10月 目录 1简介,1 2titlesec基本功能,2 2.1.格式,2.—2.2.间隔, 3.—2.3.工具,3. 3titlesec用法进阶,3 3.1.标题格式,3.—3.2.标题间距, 4.—3.3.与间隔相关的工具, 5.—3.4.标题 填充,5.—3.5.页面类型,6.—3.6.断行,6. 4titletoc部分,6 4.1.titletoc快速上手,6. 1简介 The titlesec and titletoc宏包是用来改变L A T E X中默认标题和目录样式的,可以提供当前L A T E X中没有的功能。Piet van Oostrum写的fancyhdr宏包、Rowland McDonnell的sectsty宏包以及Peter Wilson的tocloft宏包用法更容易些;如果希望用法简单的朋友,可以考虑使用它们。 要想正确使用titlesec宏包,首先要明白L A T E X中标题的构成,一个完整的标题是由标签+间隔+标题内容构成的。比如: 1.这是一个标题,此标题中 1.就是这个标题的标签,这是一个标签是此标题的内容,它们之间的间距就是间隔了。 1 2titlesec基本功能 改变标题样式最容易的方法就是用几向个命令和一系列选项。如果你感觉用这种方法已经能满足你的需求,就不要读除本节之外的其它章节了1。 2.1格式 格式里用三组选项来控制字体的簇、大小以及对齐方法。没有必要设置每一个选项,因为有些选项已经有默认值了。 rm s f t t md b f up i t s l s c 用来控制字体的族和形状2,默认是bf,详情见表1。 项目意义备注(相当于) rm roman字体\textrm{...} sf sans serif字体\textsf{...} tt typewriter字体\texttt{...} md mdseries(中等粗体)\textmd{...} bf bfseries(粗体)\textbf{...} up直立字体\textup{...} it italic字体\textit{...} sl slanted字体\textsl{...} sc小号大写字母\textsc{...} 表1:字体族、形状选项 bf和md属于控制字体形状,其余均是切换字体族的。 b i g medium s m a l l t i n y(大、中、小、很小) 用来标题字体的大小,默认是big。 1这句话是宏包作者说的,不过我感觉大多情况下,是不能满足需要的,特别是中文排版,英文 可能会好些! 2L A T E X中的字体有5种属性:编码、族、形状、系列和尺寸。 2 “3D打印与激光再制造技术”学习心得 一、对3D打印技术的基本认知 3D打印就是将计算机中设计的三维模型分层,得到许多二维平面图形,再利用各种材质的粉末或熔融的塑料或其它材料逐层打印这些二维图形,堆叠成为三维实体。作为3D打印技术包括了三维模型的建模、机械及其自动控制(机电一体化)、模型分层并转化为打印指令代码软件等技术。 不远的将来,我们完全可以用电脑把自己想要的东西设计出来,然后进行三维打印,就像我们现在可以在线编辑文档一样。通过电子设计文件或设计蓝图,3D打印技术将会把数字信息转化为实体物品。当然,这还不是3D打印的全部,3D打印最具魔力的地方是,它将给材料科学、生物科学带来翻天覆地的变化,最终的结果是科学技术和创新呈现爆发式的变革。 3D打印最直接的效益,就是对材料行业的拉动,随着3D产业规模的扩大,必将推动材料行业的不断变革。 3D打印机本是一种相对粗陋的机器,从工厂诞生,然后走进了家庭、企业、学校、厨房、医院、甚至时尚T台。但是一旦将3D打印机与当今发达的数字技术相结合,奇迹就发生了。再加上互联网的普及以及微小而成本低廉的电子电路的广泛使用,在材料科学和生物技术取得日新月异进步的今天,3D打印应用前景简直令人不可想象! 二、3D打印在我公司的应用前景 我们*****公司是一家叉车制造企业,每年都会进行不间断的新产 品研发。在这个过程中,3D扫描和3D打印技术有着良好的应用前景。譬如叉车的后配重由众多复杂曲面连接而成,设计上极为困难。有了3D激光扫描仪,我们可以从市场上采购造型优美的后配重成品,采用逆向工程技术进行3D扫描后,适当修型再转化为3D图纸为我所用。修改后配重也可用3D打印机做出样品,供领导决策时使用。这种3D模型外观上、结构上与设计实体完全一致,非常直观,有助于公司领导快速决策。因此3D打印技术的成功应用,必将加快我公司产品研发进度,缩短产品研发周期,提高市场响应能力! 三、3D打印技术应用的局限性 3D打印结合了智能制造技术、自动控制技术和新材料技术,确实具备了引领第三次工业革命的有利条件,但是3D打印技术的大规模工业化应用还存在诸多限制。首先3D打印的材料品种单一,价格也过于昂贵,用于打印样品则可,用于批量生产则经济上决不可行;其次从效率上来说,采用3D打印技术工艺生产一个零件还是过于浪费时间了;其三,3D打印零部件的内在质量可靠性仍然存疑。采用金属粉末加上激光烧结和激光熔覆技术打印的零部件,其内在金属结构与采用现代冶金技术生产的零部件,在组织的致密性、结合的紧密性方面差距很大,影响3D打印零部件的结构强度。因此3D打印技术现阶段真正的实际应用,仅仅在产品研发过程的样品制造、工业生产中的砂型制造、生物医学上的器官仿生等方面较为成熟。那些3D 技术打印的裙子、鞋子不过是艺术家自娱自乐的哗众取宠罢了。至于劳心费力的捣鼓出一个3D打印的水果,除了追求标新立异之外没有 毕业论文写作要求与格式规范 关于《毕业论文写作要求与格式规范》,是我们特意为大家整理的,希望对大家有所帮助。 (一)文体 毕业论文文体类型一般分为:试验论文、专题论文、调查报告、文献综述、个案评述、计算设计等。学生根据自己的实际情况,可以选择适合的文体写作。 (二)文风 符合科研论文写作的基本要求:科学性、创造性、逻辑性、 实用性、可读性、规范性等。写作态度要严肃认真,论证主题应有一定理论或应用价值;立论应科学正确,论据应充实可靠,结构层次应清晰合理,推理论证应逻辑严密。行文应简练,文笔应通顺,文字应朴实,撰写应规范,要求使用科研论文特有的科学语言。 (三)论文结构与排列顺序 毕业论文,一般由封面、独创性声明及版权授权书、摘要、目录、正文、后记、参考文献、附录等部分组成并按前后顺序排列。 1.封面:毕业论文(设计)封面具体要求如下: (1)论文题目应能概括论文的主要内容,切题、简洁,不超过30字,可分两行排列; (2)层次:大学本科、大学专科 (3)专业名称:机电一体化技术、计算机应用技术、计算机网络技术、数控技术、模具设计与制造、电子信息、电脑艺术设计、会计电算化、商务英语、市场营销、电子商务、生物技术应用、设施农业技术、园林工程技术、中草药栽培技术和畜牧兽医等专业,应按照标准表述填写; (4)日期:毕业论文(设计)完成时间。 2.独创性声明和关于论文使用授权的说明:需要学生本人签字。 3.摘要:论文摘要的字数一般为300字左右。摘要是对论文的内容不加注释和评论的简短陈述,是文章内容的高度概括。主要内容包括:该项研究工作的内容、目的及其重要性;所使用的实验方法;总结研究成果,突出作者的新见解;研究结论及其意义。摘要中不列举例证,不描述研究过程,不做自我评价。 3D打印与激光再制造技术”学习心得 向军 一、对3D打印技术的基本认知 D打印就是将计算机中设计的三维模型分层,得到许多二维平面图形,再利用各种材质的粉末或熔融的塑料或其它材料逐层打印这些二维图形,堆叠成为三维实体。作为3D打印技术包括了三维模型的建模、机械及其自动控制(机电一体化)、模型分层并转化为打印指令代码软件等技术。 远的将来,我们完全可以用电脑把自己想要的东西设计出来,然后进行三维打印,就像我们现在可以在线编辑文档一样。通过电子设计文件或设计蓝图,3D打印技术将会把数字信息转化为实体物品。当然,这还不是3D打印的全部,3D打印最具魔力的地方是,它将给材料科学、生物科学带来翻天覆地的变化,最终的结果是科学技术和创新呈现爆发式的变革。 D打印最直接的效益,就是对材料行业的拉动,随着3D产业规模的扩大,必将推动材料行业的不断变革。 D打印机本是一种相对粗陋的机器,从工厂诞生,然后走进了家庭、企业、学校、厨房、医院、甚至时尚T台。但是一旦将3D打印机与当今发达的数字技术相结合,奇迹就发生了。再加上互联网的普及以及微小而成本低廉的电子电路的广泛使用,在材料科学和生物技术取得日新月异进步的今天,3D打印应用前景简直令人不可想象! 二、3D打印的应用前景 了3D激光扫描仪,我们可以从市场上采购造型优美的后配重成品,采用逆向工程技术进行3D扫描后,适当修型再转化为3D图纸为我所用。修改后配重也可用3D打印机做出样品,这种3D模型外观上、结构上与设计实体完全一致,非常直观。 三、3D打印技术应用的局限性 D打印结合了智能制造技术、自动控制技术和新材料技术,确实具备了引领第三次工业革命的有利条件,但是3D打印技术的大规模工业化应用还存在诸多限制。首先3D打印的材料品种单一,价格也过于昂贵,用于打印样品则可,用于批量生产则经济上决不可行;其次从效率上来说,采用3D打印技术工艺生产一个零件还是过于浪费时间了;其三,3D打印零部件的内在质量可靠性仍然存疑。采用金属粉末加上激光烧结和激光熔覆技术打印的零部件,其内在金属结构与采用现代冶金技术生产的零部件,在组织的致密性、结合的紧密性方面差距很大,影响3D打印零部件的结构强度。因此3D打印技术现阶 公文格式规范与常见公文写作 一、公文概述与公文格式规范 党政机关公文种类的区分、用途的确定及格式规范等,由中共中央办公厅、国务院办公厅于2012年4月16日印发,2012年7月1日施行的《党政机关公文处理工作条例》规定。之前相关条例、办法停止执行。 (一)公文的含义 公文,即公务文书的简称,属应用文。 广义的公文,指党政机关、社会团体、企事业单位,为处理公务按照一定程序而形成的体式完整的文字材料。 狭义的公文,是指在机关、单位之间,以规范体式运行的文字材料,俗称“红头文件”。 ?(二)公文的行文方向和原则 ?、上行文下级机关向上级机关行文。有“请示”、“报告”、和“意见”。 ?、平行文同级机关或不相隶属机关之间行文。主要有“函”、“议案”和“意见”。 ?、下行文上级机关向下级机关行文。主要有“决议”、“决定”、“命令”、“公报”、“公告”、“通告”、“意见”、“通知”、“通报”、“批复”和“会议纪要”等。 ?其中,“意见”、“会议纪要”可上行文、平行文、下行文。?“通报”可下行文和平行文。 ?原则: ?、根据本机关隶属关系和职权范围确定行文关系 ?、一般不得越级行文 ?、同级机关可以联合行文 ?、受双重领导的机关应分清主送机关和抄送机关 ?、党政机关的部门一般不得向下级党政机关行文 ?(三) 公文的种类及用途 ?、决议。适用于会议讨论通过的重大决策事项。 ?、决定。适用于对重要事项作出决策和部署、奖惩有关单位和人员、变更或撤销下级机关不适当的决定事项。 ?、命令(令)。适用于公布行政法规和规章、宣布施行重大强制性措施、批准授予和晋升衔级、嘉奖有关单位和人员。 ?、公报。适用于公布重要决定或者重大事项。 ?、公告。适用于向国内外宣布重要事项或者法定事项。 ?、通告。适用于在一定范围内公布应当遵守或者周知的事项。?、意见。适用于对重要问题提出见解和处理办法。 ?、通知。适用于发布、传达要求下级机关执行和有关单位周知或者执行的事项,批转、转发公文。 ?、通报。适用于表彰先进、批评错误、传达重要精神和告知重要情况。 ?、报告。适用于向上级机关汇报工作、反映情况,回复上级机关的询问。 ?、请示。适用于向上级机关请求指示、批准。 ?、批复。适用于答复下级机关请示事项。 ?、议案。适用于各级人民政府按照法律程序向同级人民代表大会或者人民代表大会常务委员会提请审议事项。 ?、函。适用于不相隶属机关之间商洽工作、询问和答复问题、请求批准和答复审批事项。 ?、纪要。适用于记载会议主要情况和议定事项。?(四)、公文的格式规范 ?、眉首的规范 ?()、份号 ?也称编号,置于公文首页左上角第行,顶格标注。“秘密”以上等级的党政机关公文,应当标注份号。 ?()、密级和保密期限 ?分“绝密”、“机密”、“秘密”三个等级。标注在份号下方。?()、紧急程度 ?分为“特急”和“加急”。由公文签发人根据实际需要确定使用与否。标注在密级下方。 ?()、发文机关标志(或称版头) ?由发文机关全称或规范化简称加“文件”二字组成。套红醒目,位于公文首页正中居上位置(按《党政机关公文格式》标准排 3D打印技术学习心得文件排版存档编号:[UYTR-OUPT28-KBNTL98-UYNN208] “3D打印与激光再制造技术”学习心得 张向军 一、对3D打印技术的基本认知 3D打印就是将计算机中设计的三维模型分层,得到许多二维平面图形,再利用各种材质的粉末或熔融的塑料或其它材料逐层打印这些二维图形,堆叠成为三维实体。作为3D打印技术包括了三维模型的建模、机械及其自动控制(机电一体化)、模型分层并转化为打印指令代码软件等技术。 不远的将来,我们完全可以用电脑把自己想要的东西设计出来,然后进行三维打印,就像我们现在可以在线编辑文档一样。通过电子设计文件或设计蓝图,3D打印技术将会把数字信息转化为实体物品。当然,这还不是3D打印的全部,3D打印最具魔力的地方是,它将给材料科学、生物科学带来翻天覆地的变化,最终的结果是科学技术和创新呈现爆发式的变革。 3D打印最直接的效益,就是对材料行业的拉动,随着3D产业规模的扩大,必将推动材料行业的不断变革。 3D打印机本是一种相对粗陋的机器,从工厂诞生,然后走进了家庭、企业、学校、厨房、医院、甚至时尚T台。但是一旦将3D打印机与当今发达的数字技术相结合,奇迹就发生了。再加上互联网的普及以及微小而成本低廉的电子电路的广泛使用,在材料科学和生物技术取得日新月异进步的今天,3D打印应用前景简直令人不可想象! 二、3D打印的应用前景 有了3D激光扫描仪,我们可以从市场上采购造型优美的后配重成品,采用逆向工程技术进行3D扫描后,适当修型再转化为3D图纸为我所用。修改后配重也可用 3D打印机做出样品,这种3D模型外观上、结构上与设计实体完全一致,非常直观。 三、3D打印技术应用的局限性 3D打印结合了智能制造技术、自动控制技术和新材料技术,确实具备了引领第三次工业革命的有利条件,但是3D打印技术的大规模工业化应用还存在诸多限制。首先3D打印的材料品种单一,价格也过于昂贵,用于打印样品则可,用于批量生产则经济上决不可行;其次从效率上来说,采用3D打印技术工艺生产一个零件还是过于浪费时间了;其三,3D打印零部件的内在质量可靠性仍然存疑。采用金属粉末加上激光烧结和激光熔覆技术打印的零部件,其内在金属结构与采用现代冶金技术生产的零部件,在组织的致密性、结合的紧密性方面差距很大,影响3D打印零部件的结构强度。因此3D打印技术现阶段真正的实际应用,仅仅在产品研发过程的样品制造、工业生产中的砂型制造、生物医学上的器官仿生等方面较为成熟。那些3D技术打印的裙子、鞋子不过是艺术家自娱自乐的哗众取宠罢了。至于劳心费力的捣鼓出一个3D打印的水果,除了追求标新立异之外没有任何现实意义! 四、对我国发展3D产业技术的几点思考 3D技术产业化发展有其局限性,存在诸多急需突破的技术瓶颈,能否真正引领第三次工业革命浪潮尚无定论。但3D 打印毕竟是代表制造技术发展方向的前沿技术,从国家层面还是要提前谋划、提前布局、紧密跟踪。首先,要从小学阶段开始在学校普及3D打印知识教育,让孩子们从小接触3D打印技术,有利于培养他们的动手能力和创新精神。桌面级3D打印机价格已经非常便宜,完全具备全面推广条件。其次,着力培养大型3D打印和扫描技术企业,组建3D产业技术国家队,壮大企业实力,参与国际竞争。目前我国3D制造企业规模偏小,实力不强,大多数在破产的边缘苦苦挣扎,很难与国外的大公司展开真正意义上的竞争,因此政府主导的 ctex宏包说明 https://www.360docs.net/doc/77827389.html,? 版本号:v1.02c修改日期:2011/03/11 摘要 ctex宏包提供了一个统一的中文L A T E X文档框架,底层支持CCT、CJK和xeCJK 三种中文L A T E X系统。ctex宏包提供了编写中文L A T E X文档常用的一些宏定义和命令。 ctex宏包需要CCT系统或者CJK宏包或者xeCJK宏包的支持。主要文件包括ctexart.cls、ctexrep.cls、ctexbook.cls和ctex.sty、ctexcap.sty。 ctex宏包由https://www.360docs.net/doc/77827389.html,制作并负责维护。 目录 1简介2 2使用帮助3 2.1使用CJK或xeCJK (3) 2.2使用CCT (3) 2.3选项 (4) 2.3.1只能用于文档类的选项 (4) 2.3.2只能用于文档类和ctexcap.sty的选项 (4) 2.3.3中文编码选项 (4) 2.3.4中文字库选项 (5) 2.3.5CCT引擎选项 (5) 2.3.6排版风格选项 (5) 2.3.7宏包兼容选项 (6) 2.3.8缺省选项 (6) 2.4基本命令 (6) 2.4.1字体设置 (6) 2.4.2字号、字距、字宽和缩进 (7) ?https://www.360docs.net/doc/77827389.html, 1 1简介2 2.4.3中文数字转换 (7) 2.5高级设置 (8) 2.5.1章节标题设置 (9) 2.5.2部分修改标题格式 (12) 2.5.3附录标题设置 (12) 2.5.4其他标题设置 (13) 2.5.5其他设置 (13) 2.6配置文件 (14) 3版本更新15 4开发人员17 1简介 这个宏包的部分原始代码来自于由王磊编写cjkbook.cls文档类,还有一小部分原始代码来自于吴凌云编写的GB.cap文件。原来的这些工作都是零零碎碎编写的,没有认真、系统的设计,也没有用户文档,非常不利于维护和改进。2003年,吴凌云用doc和docstrip工具重新编写了整个文档,并增加了许多新的功能。2007年,oseen和王越在ctex宏包基础上增加了对UTF-8编码的支持,开发出了ctexutf8宏包。2009年5月,我们在Google Code建立了ctex-kit项目1,对ctex宏包及相关宏包和脚本进行了整合,并加入了对XeT E X的支持。该项目由https://www.360docs.net/doc/77827389.html,社区的开发者共同维护,新版本号为v0.9。在开发新版本时,考虑到合作开发和调试的方便,我们不再使用doc和docstrip工具,改为直接编写宏包文件。 最初Knuth设计开发T E X的时候没有考虑到支持多国语言,特别是多字节的中日韩语言。这使得T E X以至后来的L A T E X对中文的支持一直不是很好。即使在CJK解决了中文字符处理的问题以后,中文用户使用L A T E X仍然要面对许多困难。最常见的就是中文化的标题。由于中文习惯和西方语言的不同,使得很难直接使用原有的标题结构来表示中文标题。因此需要对标准L A T E X宏包做较大的修改。此外,还有诸如中文字号的对应关系等等。ctex宏包正是尝试着解决这些问题。中间很多地方用到了在https://www.360docs.net/doc/77827389.html,论坛上的讨论结果,在此对参与讨论的朋友们表示感谢。 ctex宏包由五个主要文件构成:ctexart.cls、ctexrep.cls、ctexbook.cls和ctex.sty、ctexcap.sty。ctex.sty主要是提供整合的中文环境,可以配合大多数文档类使用。而ctexcap.sty则是在ctex.sty的基础上对L A T E X的三个标准文档类的格式进行修改以符合中文习惯,该宏包只能配合这三个标准文档类使用。ctexart.cls、ctexrep.cls、ctexbook.cls则是ctex.sty、ctexcap.sty分别和三个标准文档类结合产生的新文档类,除了包含ctex.sty、ctexcap.sty的所有功能,还加入了一些修改文档类缺省设置的内容(如使用五号字体为缺省字体)。 1https://www.360docs.net/doc/77827389.html,/p/ctex-kit/ 学生会文档书写格式规范要求 目前各部门在日常文书编撰中大多按照个人习惯进行排版,文档中字体、文字大小、行间距、段落编号、页边距、落款等参数设置不规范,严重影响到文书的标准性和美观性,以下是文书标准格式要求及日常文档书写注意事项,请各部门在今后工作中严格实行: 一、文件要求 1.文字类采用Word格式排版 2.统计表、一览表等表格统一用Excel格式排版 3.打印材料用纸一般采用国际标准A4型(210mm×297mm),左侧装订。版面方向以纵向为主,横向为辅,可根据实际需要确定 4.各部门的职责、制度、申请、请示等应一事一报,禁止一份行文内同时表述两件工作。 5.各类材料标题应规范书写,明确文件主要内容。 二、文件格式 (一)标题 1.文件标题:标题应由发文机关、发文事由、公文种类三部分组成,黑体小二号字,不加粗,居中,段后空1行。 (二)正文格式 1. 正文字体:四号宋体,在文档中插入表格,单元格内字体用宋体,字号可根据内容自行设定。 2.页边距:上下边距为2.54厘米;左右边距为 3.18厘米。 3.页眉、页脚:页眉为1.5厘米;页脚为1.75厘米; 4.行间距:1.5倍行距。 5.每段前的空格请不要使用空格,应该设置首先缩进2字符 6.年月日表示:全部采用阿拉伯数字表示。 7.文字从左至右横写。 (三)层次序号 (1)一级标题:一、二、三、 (2)二级标题:(一)(二)(三) (3)三级标题:1. 2. 3. (4)四级标题:(1)(2)(3) 注:三个级别的标题所用分隔符号不同,一级标题用顿号“、”例如:一、二、等。二级标题用括号不加顿号,例如:(三)(四)等。三级标题用字符圆点“.”例如:5. 6.等。 (四)、关于落款: 1.对外行文必须落款“湖南环境生物专业技术学院学生会”“校学生会”各部门不得随意使用。 2.各部门文件落款需注明组织名称及部门“湖南环境生物专业技术学院学生会XX部”“校学生会XX部” 3.所有行文落款不得出现“环境生物学院”“湘环学院”“学生会”等表述不全的简称。 4.落款填写至文档末尾右对齐,与前一段间隔2行 5.时间落款:文档中落款时间应以“2016年5月12日”阿拉伯数字 3D打印技术学习心得体会总结范文 3D打印技术又称三维打印技术,是一种以数字模型文件为基础,通过逐层打印的方式来构造物体的技术。下面就让X给大家分享几篇3D打印技术学习心得体会吧,希望能对你有帮助! 3D打印技术学习心得体会篇一 3D打印是一种通过材料逐层添加制造三维物体的变革性、数字化增材制造技术,它将信息、材料、生物、控制等技术融合渗透,将对未来制造业生产模式与人类生活方式产生重要影响。3D打印这种智能制造的逻辑轨迹是控制物质的形状、控制物质的构成和控制行为,也就是实现物体在结构、材料和活性上的有机统一。 越来越多的迹象表明,3D打印技术已经从实验室和工厂逐渐走出来了,并且走进学校和家庭,与我们每个普通人的生活息息相关。这样会极大地冲击我们的工作方式,或者增强很多行业的生命力。然而我们必须清 醒地意识到3D打印技术也会催生大量前所未有的行业和巨大机遇,我们必须牢牢的抓住机遇,因为第三次工业革命的序幕已经拉开。 目前3D印刷技术已深入到了各行各业,给人以耳目一新之感。 3D印刷术被看作是信息时代印刷术的一次惊人的飞跃,有人将它与开启工业革命的蒸汽机相提并论。3D打印机能做:艺术品、精密零件制造业、家庭装饰、建筑、食品和服装、人偶玩具、珠宝、医学、产品原型等。 关于3D打印技术的几点思考,在信息技术飞速发展的今天,我校的教学也应该与时俱进,例如在我们动漫专业的设计当中引入3D打印技术,电子创新班的产品设计中引入3D打印技术,在通信维修综合布线中引入3D印刷技术,机器人设计中引入3D打印技术等等,将对我们的学生更加有学习的成就感。3D打印技术学习心得体会篇二通过为期两周的3D打印技术的培训与学习,对3D打印技术有了更深刻的认识和理解。现将两周(12月4号-13号)的学习心 政府公文写作格式 一、眉首部分 (一)发文机关标识 平行文和下行文的文件头,发文机关标识上边缘至上页边为62mm,发文机关下边缘至红色反线为28mm。 上行文中,发文机关标识上边缘至版心上边缘为80mm,即与上页边距离为117mm,发文机关下边缘至红色反线为30mm。 发文机关标识使用字体为方正小标宋_GBK,字号不大于22mm×15mm。 (二)份数序号 用阿拉伯数字顶格标识在版心左上角第一行,不能少于2位数。标识为“编号000001” (三)秘密等级和保密期限 用3号黑体字顶格标识在版心右上角第一行,两字中间空一字。如需要加保密期限的,密级与期限间用“★”隔开,密级中则不空字。 (四)紧急程度 用3号黑体字顶格标识在版心右上角第一行,两字中间空一字。如同时标识密级,则标识在右上角第二行。 (五)发文字号 标识在发文机关标识下两行,用3号方正仿宋_GBK字体剧 中排布。年份、序号用阿拉伯数字标识,年份用全称,用六角括号“〔〕”括入。序号不用虚位,不用“第”。发文字号距离红色反线4mm。 (六)签发人 上行文需要标识签发人,平行排列于发文字号右侧,发文字号居左空一字,签发人居右空一字。“签发人”用3号方正仿宋_GBK,后标全角冒号,冒号后用3号方正楷体_GBK标识签发人姓名。多个签发人的,主办单位签发人置于第一行,其他从第二行起排在主办单位签发人下,下移红色反线,最后一个签发人与发文字号在同一行。 二、主体部分 (一)标题 由“发文机关+事由+文种”组成,标识在红色反线下空两行,用2号方正小标宋_GBK,可一行或多行居中排布。 (二)主送机关 在标题下空一行,用3号方正仿宋_GBK字体顶格标识。回行是顶格,最后一个主送机关后面用全角冒号。 (三)正文 主送机关后一行开始,每段段首空两字,回行顶格。公文中的数字、年份用阿拉伯数字,不能回行,阿拉伯数字:用3号Times New Roman。正文用3号方正仿宋_GBK,小标题按照如下排版要求进行排版: 与D打E卩与激光再制造技术〃学习心得 张向军 一、对3D打印技术的基本认知 3D打印就是将计算机中设计的三维模型分层,得到许多二维平面图形,再利用各种材质的粉末或熔融的塑料或其它材料逐层打印这些二维图形,堆叠成为三维实体。作为3D打印技术包括了三维模型的建模、机械及其自动控制(机电一体化)、模型分层并转化为打印指令代码软件等技术。 不远的将来,我们完全可以用电脑把自己想要的东西设计出来,然后进行三维打印,就像我们现在可以在线编辑文档一样。通过电子设计文件或设计蓝图,3D打印技术将会把数字信息转化为实体物品。当然,这还不是3D打印的全部,3D打印最具魔力的地方是,它将给材料科学、生物科学带来翻天覆地的变化,最终的结果是科学技术和创新呈现爆发式的变革。 3D打印最直接的效益,就是对材料行业的拉动,随着3D产业规模的扩大,必将推动材料行业的不断变革。 3D打印机本是一种相对粗陋的机器,从工厂诞生,然后走进了家庭、企业、学校、厨房、医院、甚至时尚T台。但是一旦将3D打印机与当今发达的数字技术相结合,奇迹就发生了。再加上互联网的普及以及微小而成本低廉的电子电路的广泛使用,在材料科学和生物技术取得日新月异进步的今天,3D打印应用前景简直令人不可想象! 二、3D打印的应用前景 有了3D激光扫描仪,我们可以从市场上采购造型优美的后配重成品,采用逆向工程技术进行3D扫描后,适当修型再转化为3D图纸为我所用。修改后配重也可用3D打印机做出样品,这种3D模型外观上、结构上与设计实体完全一致,非常直观。 三、3D打印技术应用的局限性 3D打印结合了智能制造技术、自动控制技术和新材料技术,确实具备了引领第三次工业革命的有利条件,但是3D打印技术的大规模工业化应用还存在诸多限制。首先3D打印的材料品种单一,价格也过于昂贵,用于打印样品则可,用于批量生产则经济上决不可行;其次从效率上来说,采用3D打印技术工艺生产一个零件还是过于浪费时间了;其三,3D打印零部件的内在质量可靠性仍然存疑。釆用金属粉末加上激光烧结和激光熔覆技术打印的零部件,其内在金属结构与采用现代冶金技术生产的零部件,在组织的致密性、结合的紧密性方面差距很大,影响3D 打印零部件的结构强度。因此3D打印技术现阶段真正的实际应用,仅仅在产品研发过程的样品制造、工业生产中的砂型制造、生物医学上的器官仿生等方面较为成熟。那些3D技术打印的裙子、鞋子不过是艺术家自娱自乐的哗众取宠罢了。至于劳心费力的捣鼓出一个3D打印的水果,除了追求标新立异之外没有任何现实意义! 四、对我国发展3D产业技术的几点思考 3D技术产业化发展有其局限性,存在诸多急需突破的技术瓶颈,能否真正引领第三次工业革命浪潮尚无定论。但3D打印毕竟是代表制造技术 tabularx宏包中改变弹性列的宽度\hsize 分类:latex 2012-03-07 21:54 12人阅读评论(0) 收藏编辑删除 \documentclass{article} \usepackage{amsmath} \usepackage{amssymb} \usepackage{latexsym} \usepackage{CJK} \usepackage{tabularx} \usepackage{array} \newcommand{\PreserveBackslash}[1]{\let \temp =\\#1 \let \\ = \temp} \newcolumntype{C}[1]{>{\PreserveBackslash\centering}p{#1}} \newcolumntype{R}[1]{>{\PreserveBackslash\raggedleft}p{#1}} \newcolumntype{L}[1]{>{\PreserveBackslash\raggedright}p{#1}} \begin{document} \begin{CJK*}{GBK}{song} \CJKtilde \begin{tabularx}{10.5cm}{|p{3cm} |>{\setlength{\hsize}{.5\hsize}\centering}X |>{\setlength{\hsize}{1.5\hsize}}X|} %\hsize是自动计算的列宽度,上面{.5\hsize}与{1.5\hsize}中的\hsize前的数字加起来必须等于表格的弹性列数量。对于本例,弹性列有2列,所以“.5+1.5=2”正确。 %共3列,总列宽为10.5cm。第1列列宽为3cm,第3列的列宽是第2列列宽的3倍,其宽度自动计算。第2列文字左右居中对齐。注意:\multicolum命令不能跨越X列。 \hline 聪明的鱼儿在咬钩前常常排祠再三& 这是因为它们要荆断食物是否安全&知果它们认为有危险\\ \hline 它们枕不会吃& 如果它们判定没有危险& 它们就食吞钩\\ \hline 一眼识破诱饵的危险,却又不由自主地去吞钩的& 那才正是人的心理而不是鱼的心理& 是人的愚合而不是鱼的恳奋\\3D打印技术学习心得

公文写作规范格式

3d打印实习感悟心得

关于会议纪要的规范格式和写作要求

titlesec宏包使用手册

3D打印技术学习心得

毕业论文写作要求与格式规范

3D打印技术学习心得

公文格式规范与常见公文写作

3D打印技术学习心得

ctex 宏包说明 ctex

文档书写格式规范要求

3D打印技术学习心得体会总结范文

政府公文写作格式规范

3D打印技术学习心得

tabularx宏包中改变弹性列的宽度