SIFT算法英文详解

SIFT: Scale Invariant Feature Transform

The algorithm

SIFT is quite an involved algorithm. It has a lot going on and can be come confusing, So I’ve split up the entire algorithm into multiple parts. Here’s an outline of what happens in SIFT. Constructing a scale space

This is the initial preparation. You create internal representations of the original image to ensure scale invariance. This is done by generating a “scale space”.

LoG Approximation

The Laplacian of Gaussian is great for finding interesting points (or key points) in an image. But it’s computationally expensive. So we cheat and approximate it using the representation created earlier.

Finding keypoints

With the super fast approximation, we now try to find key points. These are maxima and minima in the Difference of Gaussian image we calculate in step 2

Get rid of bad key points

Edges and low contrast regions are bad keypoints. Eliminating these makes the algorithm efficient and robust. A technique similar to the Harris Corner Detector is used here.

Assigning an orientation to the keypoints

An orientation is calculated for each key point. Any further calculations are done relative to this orientation. This effectively cancels out the effect of orientation, making it rotation invariant. Generate SIFT features

Finally, with scale and rotation invariance in place, one more representation is generated. This helps uniquely identify features. Lets say you have 50,000 features. With this representation, you can easily identify the feature you’re looking for (sa y, a particular eye, or a sign board).

That was an overview of the entire algorithm. Over the next few days, I’ll go through each step in detail. Finally, I’ll show you how to implement SIFT in OpenCV!

What do I do with SIFT features?

After you run through the algorithm, you’ll have SIFT features for your image. Once you have these, you can do whatever you want.

Track images, detect and identify objects (which can be partly hidden as well), or whatever you can think of. We’ll get into this later as well.

But the catch is, this algorithm is patented.

>.<

So, it’s good enough for academic purposes. But if you’re looking to make something commercial, look for something else! [Thanks to aLu for pointing out SURF is patented too]

1. Constructing a scale space

Real world objects are meaningful only at a certain scale. You might see a sugar cube perfectly on a table. But if looking at the entire milky way, then it simply does not exist. This multi-scale nature of objects is quite common in nature. And a scale space attempts to replicate this concept

on digital images.

Scale spaces

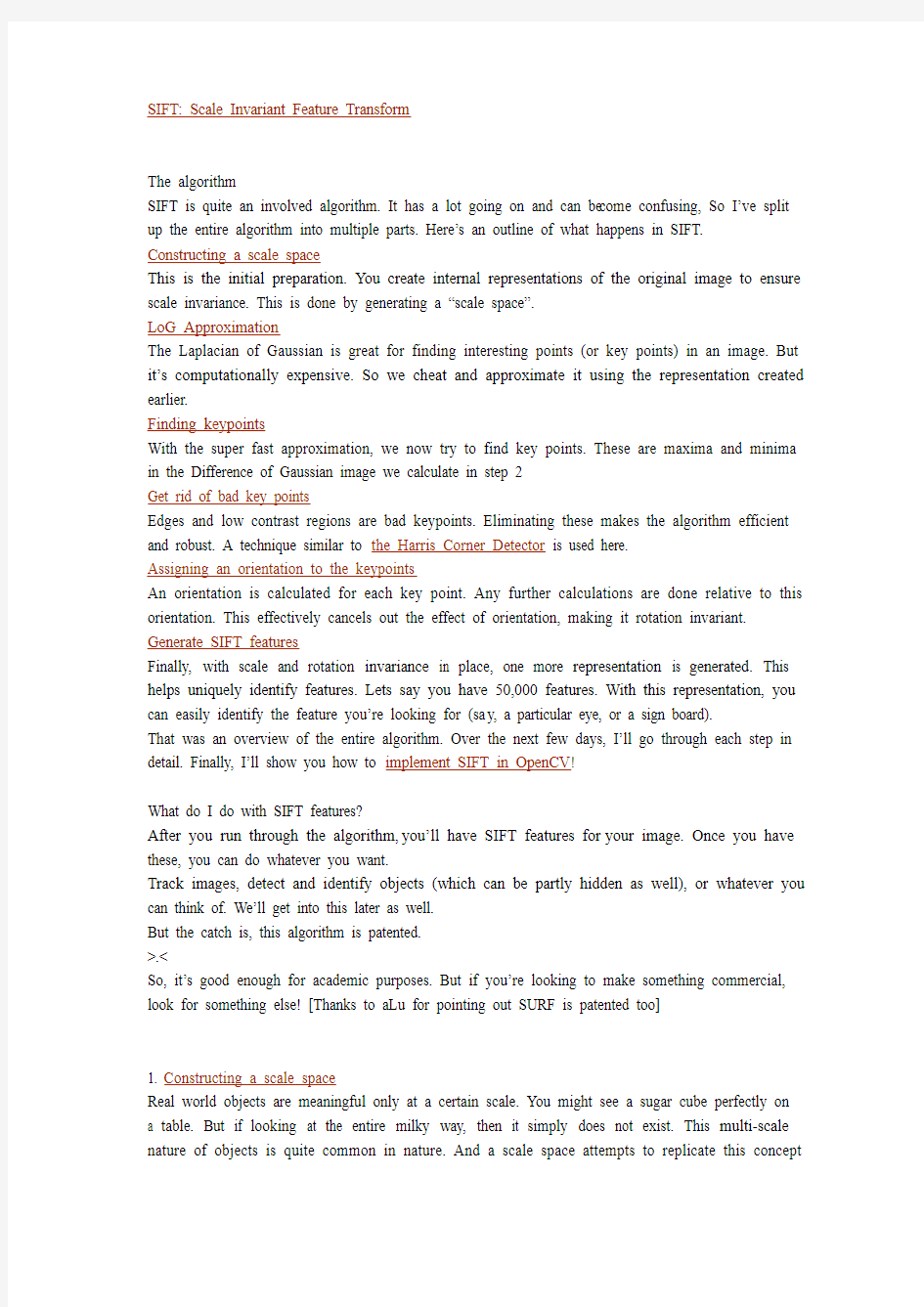

Do you want to look at a leaf or the entire tree? If it’s a tree, get rid of some detail from the image (like the leaves, twigs, etc) intentionally.

While getting rid of these details, you must ensure that you do not introduce new false details. The only way to do that is with the Gaussian Blur (it was proved mathematically, under several reasonable assumptions).

So to create a scale space, you take the original image and generate progressively blurred out images. Here’s an example:

Look at how the cat’s helmet loses detail. So do it’s whiskers.

Scale spaces in SIFT

SIFT takes scale spaces to the next level. You take the original image, and generate progressively blurred out images. Then, you resize the original image to half size. And you generate blurred out images again. And you keep repeating.

Here’s what it would look like in SIFT:

Images of the same size (vertical) form an octave. Above are four octaves. Each octave has 5 images. The individual images are formed because of the increasing “scale” (the amount of blur).

The technical details

Now that you know things the intuitive way, I’ll get into a few technical details.

Octaves and Scales

The number of octaves and scale depends on the size of the original image. While programming SIFT, you’ll have to decide for yourself how many octaves and scales you want. However, the creator of SIFT suggests that 4 octaves and 5 blur levels are ideal for the algorithm.

The first octave

If the original image is doubled in size and antialiased a bit (by blurring it) then the algorithm produces more four times more keypoints. The more the keypoints, the better!

Blurring

Mathematically, “blurring” is referred to as the convolution of the gaussian operator and the image. Gaussian blur has a particular expression or “operator” that is applied to each pixel. What results is the blurred image.

The symbols:

L is a blurred image

G is the Gaussian Blur operator

I is an image

x,y are the location coordinates

σ is the “scale” para meter. Think of it as the amount of blur. Greater the value, greater the blur. The * is the convolution operation in x and y. It “applies” gaussian blur G onto the image I.

This is the actual Gaussian Blur operator.

Amount of blurring

The amount of blurring in each image is important. It goes like this. Assume the amount of blur in a particular image is σ. Then, the amount of blur in the next image will be k*σ. Here k is whatever constant you choose.

This is a table of σ’s for my current example. See how each σ differs by a fa ctor sqrt(2) from the previous one.

Summary

In the first step of SIFT, you generate several octaves of the original image. Each octave’s image size is half the previous one. Within an octave, images are progressively blurred using the Gaussian Blur operator.

In the next step, we’ll use all these octaves to generate Difference of Gaussian images.

2. LoG Approximation

In the previous step , we created the scale space of the image. The idea was to blur an image progressively, shrink it, blur the small image progressively and so on. Now we use those blurred images to generate another set of images, the Difference of Gaussians (DoG). These DoG images are a great for finding out interesting key points in the image.

Laplacian of Gaussian

The Laplacian of Gaussian (LoG) operation goes like this. You take an image, and blur it a little. And then, you calculate second order derivatives on it (or, the “laplacian”). This locates edges and corners on the image. These edges and corners are good for finding keypoints.

But the second order derivative is extremely sensitive to noise. The blur smoothes it out the noise and stabilizes the second order derivative.

The problem is, calculating all those second order derivatives is computationally intensive. So we cheat a bit.

The Con

To generate Laplacian of Guassian images quickly, we use the scale space. We calculate the difference between two consecutive scales. Or, the Difference of Gaussians. Here’s how:

These Difference of Gaussian images are approximately equivalent to the Laplacian of Gaussian. And we’ve replaced a computationally intensive process with a simple subtraction (fast and efficient). Awesome!

These DoG images comes with another little goodie. These approximations are also “scale invariant”. What does that mean?

The Benefits

Just the Laplacian of Gaussian images aren’t great. They are not scale invariant. That is, they depend on the amount of bl ur you do. This is because of the Gaussian expression. (Don’t panic

)

See the σ2 in the demonimator? That’s the scale. If we somehow get rid of it, we’ll have true scale independence. So, if the laplacian of a gaussian is represented like this:

Then the scale invariant laplacian of gaussian would look like this:

But all these complexities are taken care of by the Difference of Gaussian operation. The resultant images after the DoG operation are already multiplied by the σ2. Great eh!

Oh! And it has also been proved that this scale invariant thingy produces much better trackable points! Even better!

Side effects

You can’t have benefits without side effects >.<

You know the DoG result is multiplied with σ2. But it’s also multiplied by another number. That number is (k-1). This is the k we discussed in the previous step.

But we’ll just be looking for the location of the maximums and minimums in the images. We’ll never check the actual values at those locations. So, this additional factor won’t be a problem to us. (Even if you multiply throughout by some constant, the maxima and minima stay at the same location)

Example

Here’s a gigantic image to demonstrate how this difference of Gaussians works.

3. Finding keypoints

Up till now, we have generated a scale space and used the scale space to calculate the Difference of Gaussians. Those are then used to calculate Laplacian of Gaussian approximations that is scale invariant. I told you that they produce great key points. Here’s how it’s done!

Finding key points is a two part process

Locate maxima/minima in DoG images

Find subpixel maxima/minima

Locate maxima/minima in DoG images

The first step is to coarsely locate the maxima and minima. This is simple. You iterate through each pixel and check all it’s neighbours. The check is done within the current image, and also the one above and below it. Something like this:

X marks the current pixel. The green circles mark the neighbours. This way, a total of 26 checks are made. X is marked as a “key point” if it is the greatest or least of all 26 neighbours. Usually, a non-maxima or non-minima position won’t have to go through all 26 checks. A few initial checks will usually sufficient to discard it.

Note that keypoints are not detected in the lowermost and topmost scal es. There simply aren’t enough neighbours to do the comparison. So simply skip them!

Once this is done, the marked points are the approximate maxima and minima. They are “approximate” because the maxima/minima almost never lies exactly on a pixel. It lies somewhere between the pixel. But we simply cannot access data “between” pixels. So, we must mathematically locate the subpixel location.

Here’s what I mean:

The red crosses mark pixels in the image. But the actual extreme point is the green one.

Find subpixel maxima/minima

Using the available pixel data, subpixel values are generated. This is done by the Taylor expansion of the image around the approximate key point.

Mathematically, it’s like this:

We can easily find the extreme points of this equation (differentiate and equate to zero). On solving, we’ll get subpixel key point locations. These subpixel values increase chances of matching and stability of the algorithm.

Example

Here’s a result I got from the example image I’ve been using till now:

Assigning an orientation to the keypoints

The author of SIFT recommends generating two such extrema images. So, you need exactly 4

DoG images. To generate 4 DoG images, you need 5 Gaussian blurred images. Hence the 5 level of blurs in each octave.

In the image, I’ve shown just one octave. This is done for all octaves. Also, this image just sh ows the first part of keypoint detection. The Taylor series part has been skipped.

Summary

Here, we detected the maxima and minima in the DoG images generated in the previous step. This is done by comparing neighbouring pixels in the current scale, the sca le “above” and the scale “below”.

Next, we’ll reject some keypoints detected here. This is because they either don’t have enough contrast or they lie on an edge

4. Get rid of bad key points

Key points generated in the previous step produce a lot of key points. Some of them lie along an edge, or they don’t have enough contrast. In b oth cases, they are not useful as features. So we get rid of them. The approach is similar to the one used in the Harris Corner Detector for removing edge features. For low contrast features, we simply check their intensities.

Removing low contrast features

This is simple. If the magnitude of the intensity (i.e., without sign) at the current pixel in the DoG image (that is being checked for minima/maxima) is less than a certain value, it is rejected. Because we have subpixel keypoints (we used the Taylor expansion to refine keypoints), we again need to use the taylor expansion to get the intensity value at subpixel locations. If it’s magnitude is less than a certain value, we reject the keypoint.

Removing edges

The idea is to calculate two gradients at the keypoint. Both perpendicular to each other. Based on the image around the keypoint, three possibilities exist. The image around the keypoint can be:

A flat region

If this is the case, both gradients will be small.

An edge

Here, one gradient will be big (perpendicular to the edge) and the other will be small (along the edge)

A “corner”

Here, both gradients will be big.

Corners are great keypoints. So we want just corners. If both gradients are big enough, we let it pass as a key point. Otherwise, it is rejected.

Mathematically, this is achieved by the Hessian Matrix. Using this matrix, you can easily check if a point is a corner or not.

If you’re interested in the math, first check the posts on the Harris corner detector. A lot of the same math used used in SIFT. In the Harris Corner Detector, two eigenvalues are calculated. In SIFT, efficiency is increased by just calculating the ratio of these two eigenvalues. You never need to calculate the actual eigenvalues.

Example

Here’s a visual example of what happens in this step:

Both extrema images go through the two tests: the contrast test and the edge test. They reject a few keypoints (sometimes a lot) and thus, we’re left with a lower number of keypoints to deal with.

Summary

In this step, the number of keypoints was reduced. This helps increase efficiency and also the robustness of the algorithm. Keypoints are rejected if they had a low contrast or if they were located on an edge.

In the next step we’ll assign an orientation to all the keypoints that passed both tests.

5. Assigning an orientation to the keypoints

After step 4, we have legitimate key points. They’ve been tested to be stable. We already know the scale at which the keypoint was detected (it’s the same as the scale of the blurred image). So we

have scale invariance. The next thing is to assign an orientation to each keypoint. This orientation

provides rotation invariance. The more invariance you have the better it is.

The idea

The idea is to collect gradient directions and magnitudes around each keypoint. Then we figure

out the most prominent orientation(s) in that region. And we assign this orientation(s) to the

keypoint.

Any later calculations are done relative to this orientation. This ensures rotation invariance.

The size of the “orientation collection region” around the keypoint depends on it’s scale. The bigger the scale, the bigger the collection region.

The details

Now for the little details about collecting orientations.

Gradient magnitudes and orientations are calculated using these formulae:

The magnitude and orientation is calculated for all pixels around the keypoint. Then, A histogram is created for this.

In this histogram, the 360 degrees of orientation are broken into 36 bins (each 10 degrees). Lets say the gradient direction at a certain point (in the “orientation collection region”) is 18.759 degrees, then it will go into the 10-19 degree bin. And the “amount” that is added to the bin is proportional to the magnitude of gradient at that point.

Once you’ve done this for all pixels around the keypoint, the histogram will have a peak at some point.

Above, you see the histogram peaks at 20-29 degrees. So, the keypoint is assigned orientation 3 (the third bin)

Also, any peaks above 80% of the highest peak are converted into a new keypoint. This new keypoint has the same location and scale as the original. But it’s orientation is equal to the other

peak.

So, orientation can split up one keypoint into multiple keypoints.

The Technical Details

Magnitudes

Saw the gradient magnitude image above? In SIFT, you need to blur it by an amount of 1.5*sigma. Size of the window

The window size, or the “orientation collection region”, is equal to the size of the kernel for Gaussian Blur of amount 1.5*sigma.

Summary

To assign an orientation we use a histogram and a small region around it. Using the histogram, the most prominent gradient orientation(s) are identified. If there is only one peak, it is assigned to the keypoint. If there are multiple peaks above the 80% mark, they are all converted into a new keypoint (with their respective orientations).

Next, we generate a highly distinctive “fingerprint” for each keypoint. Here’s a little teaser. This fingerprint, or “feature vector”, has 128 different numbers.

6. Generate SIFT features

Now for the final step of SIFT. Till now, we had scale and rotation invariance. Now we create a fingerprint for each keypoint. This is to identify a keypoint. If an eye is a keypoint, then using this finge rprint, we’ll be able to distinguish it from other keypoints, like ears, noses, fingers, etc.

The idea

We want to generate a very unique fingerprint for the keypoint. It should be easy to calculate. We also want it to be relatively lenient when it is being compared against other keypoints. Things are never EXACTL Y same when comparing two different images.

To do this, a 16×16 window around the keypoint. This 16×16 window is broken into sixteen 4×4 windows.

Within each 4×4 window, gradient magnitudes and orientations are calculated. These orientations are put into an 8 bin histogram.

Any gradient orientation in the range 0-44 degrees add to the first bin. 45-89 add to the next bin. And so on.And (as always) the amount added to the bin depends on the magnitude of the gradient. Unlike the past, the amount added also depends on the distance from the keypoint. So gradients that are far away from the keypoint will add smaller values to the histogram.

This is done u sing a “gaussian weighting function”. This function simply generates a gradient (it’s like a 2D bell curve). You multiple it with the magnitude of orientations, and you get a weighted thingy. The farther away, the lesser the magnutide.

Doing this for all 16 pixels, you would’ve “compiled” 16 totally random orientations into 8 predetermined bins. You do this for all sixteen 4×4 regions. So you end up with 4x4x8 = 128

numbers. Once you have all 128 numbers, you normalize them (just like you would normalize a vector in school, divide by root of sum of squares). These 128 numbers form the “feature vector”. This keypoint is uniquely identified by this feature vector.

You might have seen that in the pictures above, the keypoint lies “in between”. It does not lie exactly on a pixel. That’s because it does not. The 16×16 window takes orientations and magnitudes of the image “in-between” pixels. So you need to interpolate the image to generate orientation and magnitude data “in between” pixels.

Problems

This feature vector introduces a few complications. We need to get rid of them before finalizing the fingerprint.

Rotation dependence

The feature vector uses gradient orientations. Clearly, if you rotate the image, everything changes. All gradient orientations also change. To achieve rotation independence, the keypoint’s rotation is subtracted from each orientation. Thus each gradient orientation is relative to the keypoint’s orientation.

Illumination dependence

If we threshold numbers that are big, we can achieve achieve illumination independence. So, any number (of the 128) greater than 0.2 is changed to 0.2. This resultant feature vector is normalized again. And now you have an illumination independent feature vector!

Summary

You take a 16×16 window of “in-between” pixels around the keypoint. You split that window into sixteen 4×4 windows. From each 4×4 window you generate a histogram of 8 bins. Each bin corresponding to 0-44 degrees, 45-89 degrees, etc. Gradient orientations from the 4×4 are put into these bins. This is done for all 4×4 blocks. Finally, you normalize the 128 values you get.

To solve a few problems, you subtract the keypoint’s orie ntation and also threshold the value of each element of the feature vector to 0.2 (and normalize again).

SIFT算法原理

3.1.1尺度空间极值检测 尺度空间理论最早出现于计算机视觉领域,当时其目的是模拟图像数据的多尺度特征。随后Koendetink 利用扩散方程来描述尺度空间滤波过程,并由此证明高斯核是实现尺度变换的唯一变换核。Lindeberg ,Babaud 等人通过不同的推导进一步证明高斯核是唯一的线性核。因此,尺度空间理论的主要思想是利用高斯核对原始图像进行尺度变换,获得图像多尺度下的尺度空间表示序列,对这些序列进行尺度空间特征提取。二维高斯函数定义如下: 222()/221 (,,)2x y G x y e σσπσ-+= (5) 一幅二维图像,在不同尺度下的尺度空间表示可由图像与高斯核卷积得到: (,,(,,)*(,)L x y G x y I x y σσ)= (6) 其中(x,y )为图像点的像素坐标,I(x,y )为图像数据, L 代表了图像的尺度空间。σ称为尺度空间因子,它也是高斯正态分布的方差,其反映了图像被平滑的程度,其值越小表征图像被平滑程度越小,相应尺度越小。大尺度对应于图像的概貌特征,小尺度对应于图像的细节特征。因此,选择合适的尺度因子平滑是建立尺度空间的关键。 在这一步里面,主要是建立高斯金字塔和DOG(Difference of Gaussian)金字塔,然后在DOG 金字塔里面进行极值检测,以初步确定特征点的位置和所在尺度。 (1)建立高斯金字塔 为了得到在不同尺度空间下的稳定特征点,将图像(,)I x y 与不同尺度因子下的高斯核(,,)G x y σ进行卷积操作,构成高斯金字塔。 高斯金字塔有o 阶,一般选择4阶,每一阶有s 层尺度图像,s 一般选择5层。在高斯金字塔的构成中要注意,第1阶的第l 层是放大2倍的原始图像,其目的是为了得到更多的特征点;在同一阶中相邻两层的尺度因子比例系数是k ,则第1阶第2层的尺度因子是k σ,然后其它层以此类推则可;第2阶的第l 层由第一阶的中间层尺度图像进行子抽样获得,其尺度因子是2k σ,然后第2阶的第2层的尺度因子是第1层的k 倍即3 k σ。第3阶的第1层由第2阶的中间层尺度图像进行子抽样获得。其它阶的构成以此类推。 (2)建立DOG 金字塔 DOG 即相邻两尺度空间函数之差,用(,,)D x y σ来表示,如公式(3)所示: (,,)((,,)(,,))*(,)(,,)(,,)D x y G x y k G x y I x y L x y k L x y σσσσσ=-=- (7) DOG 金字塔通过高斯金字塔中相邻尺度空间函数相减即可,如图1所示。在图中,DOG 金字塔的第l 层的尺度因子与高斯金字塔的第l 层是一致的,其它阶也一样。

SIFT算法实现及代码详解

经典算法SIFT实现即代码解释: 以下便是sift源码库编译后的效果图:

为了给有兴趣实现sift算法的朋友提供个参考,特整理此文如下。要了解什么是sift算法,请参考:九、图像特征提取与匹配之SIFT算法。ok,咱们下面,就来利用Rob Hess维护的sift 库来实现sift算法: 首先,请下载Rob Hess维护的sift 库: https://www.360docs.net/doc/1b12836210.html,/hess/code/sift/ 下载Rob Hess的这个压缩包后,如果直接解压缩,直接编译,那么会出现下面的错误提示: 编译提示:error C1083: Cannot open include file: 'cxcore.h': No such file or directory,找不到这个头文件。 这个错误,是因为你还没有安装opencv,因为:cxcore.h和cv.h是开源的OPEN CV头文件,不是VC++的默认安装文件,所以你还得下载OpenCV并进行安装。然后,可以在OpenCV文件夹下找到你所需要的头文件了。 据网友称,截止2010年4月4日,还没有在VC6.0下成功使用opencv2.0的案例。所以,如果你是VC6.0的用户请下载opencv1.0版本。vs的话,opencv2.0,1.0任意下载。 以下,咱们就以vc6.0为平台举例,下载并安装opencv1.0版本、gsl等。当然,你也可以用vs编译,同样下载opencv(具体版本不受限制)、gsl等。 请按以下步骤操作: 一、下载opencv1.0 https://www.360docs.net/doc/1b12836210.html,/projects/opencvlibrary/files/opencv-win/1.0/OpenCV_1.0.exe

SIFT算法英文详解

SIFT: Scale Invariant Feature Transform The algorithm SIFT is quite an involved algorithm. It has a lot going on and can be come confusing, So I’ve split up the entire algorithm into multiple parts. Here’s an outline of what happens in SIFT. Constructing a scale space This is the initial preparation. You create internal representations of the original image to ensure scale invariance. This is done by generating a “scale space”. LoG Approximation The Laplacian of Gaussian is great for finding interesting points (or key points) in an image. But it’s computationally expensive. So we cheat and approximate it using the representation created earlier. Finding keypoints With the super fast approximation, we now try to find key points. These are maxima and minima in the Difference of Gaussian image we calculate in step 2 Get rid of bad key points Edges and low contrast regions are bad keypoints. Eliminating these makes the algorithm efficient and robust. A technique similar to the Harris Corner Detector is used here. Assigning an orientation to the keypoints An orientation is calculated for each key point. Any further calculations are done relative to this orientation. This effectively cancels out the effect of orientation, making it rotation invariant. Generate SIFT features Finally, with scale and rotation invariance in place, one more representation is generated. This helps uniquely identify features. Lets say you have 50,000 features. With this representation, you can easily identify the feature you’re looking for (sa y, a particular eye, or a sign board). That was an overview of the entire algorithm. Over the next few days, I’ll go through each step in detail. Finally, I’ll show you how to implement SIFT in OpenCV! What do I do with SIFT features? After you run through the algorithm, you’ll have SIFT features for your image. Once you have these, you can do whatever you want. Track images, detect and identify objects (which can be partly hidden as well), or whatever you can think of. We’ll get into this later as well. But the catch is, this algorithm is patented. >.< So, it’s good enough for academic purposes. But if you’re looking to make something commercial, look for something else! [Thanks to aLu for pointing out SURF is patented too] 1. Constructing a scale space Real world objects are meaningful only at a certain scale. You might see a sugar cube perfectly on a table. But if looking at the entire milky way, then it simply does not exist. This multi-scale nature of objects is quite common in nature. And a scale space attempts to replicate this concept

SIFT 特征提取算法详解

SIFT 特征提取算法总结 主要步骤 1)、尺度空间的生成; 2)、检测尺度空间极值点; 3)、精确定位极值点; 4)、为每个关键点指定方向参数; 5)、关键点描述子的生成。 L(x,y,σ), σ= 1.6 a good tradeoff

D(x,y,σ), σ= 1.6 a good tradeoff

关于尺度空间的理解说明:图中的2是必须的,尺度空间是连续的。在 Lowe 的论文中, 将第0层的初始尺度定为1.6,图片的初始尺度定为0.5. 在检测极值点前对原始图像的高斯平滑以致图像丢失高频信息,所以Lowe 建议在建立尺度空间前首先对原始图像长宽扩展一倍,以保留原始图像信息,增加特征点数量。尺度越大图像越模糊。 next octave 是由first octave 降采样得到(如2) , 尺度空间的所有取值,s为每组层数,一般为3~5 在DOG尺度空间下的极值点 同一组中的相邻尺度(由于k的取值关系,肯定是上下层)之间进行寻找

在极值比较的过程中,每一组图像的首末两层是无法进行极值比较的,为了满足尺度 变化的连续性,我们在每一组图像的顶层继续用高斯模糊生成了 3 幅图像, 高斯金字塔有每组S+3层图像。DOG金字塔每组有S+2层图像.

If ratio > (r+1)2/(r), throw it out (SIFT uses r=10) 表示DOG金字塔中某一尺度的图像x方向求导两次 通过拟和三维二次函数以精确确定关键点的位置和尺度(达到亚像素精度)?

直方图中的峰值就是主方向,其他的达到最大值80%的方向可作为辅助方向 Identify peak and assign orientation and sum of magnitude to key point The user may choose a threshold to exclude key points based on their assigned sum of magnitudes. 利用关键点邻域像素的梯度方向分布特性为每个关键点指定方向参数,使算子具备 旋转不变性。以关键点为中心的邻域窗口内采样,并用直方图统计邻域像素的梯度 方向。梯度直方图的范围是0~360度,其中每10度一个柱,总共36个柱。随着距中心点越远的领域其对直方图的贡献也响应减小.Lowe论文中还提到要使用高斯函 数对直方图进行平滑,减少突变的影响。

SIFT算法C语言逐步实现详解

SIFT算法C语言逐步实现详解(上) 引言: 在我写的关于sift算法的前倆篇文章里头,已经对sift算法有了初步的介绍:九、图像特征提取与匹配之SIFT算法,而后在:九(续)、sift算法的编译与实现里,我也简单记录下了如何利用opencv,gsl等库编译运行sift程序。 但据一朋友表示,是否能用c语言实现sift算法,同时,尽量不用到opencv,gsl等第三方库之类的东西。而且,Rob Hess维护的sift 库,也不好懂,有的人根本搞不懂是怎么一回事。 那么本文,就教你如何利用c语言一步一步实现sift算法,同时,你也就能真正明白sift算法到底是怎么一回事了。 ok,先看一下,本程序最终运行的效果图,sift 算法分为五个步骤(下文详述),对应以下第二--第六幅图:

sift算法的步骤 要实现一个算法,首先要完全理解这个算法的原理或思想。咱们先来简单了解下,什么叫sift算法: sift,尺度不变特征转换,是一种电脑视觉的算法用来侦测与描述影像中的局部性特征,它在空间尺度中寻找极值点,并提取出其位置、尺度、旋转不变量,此算法由David Lowe 在1999年所发表,2004年完善总结。 所谓,Sift算法就是用不同尺度(标准差)的高斯函数对图像进行平滑,然后比较平滑后图像的差别, 差别大的像素就是特征明显的点。 以下是sift算法的五个步骤: 一、建立图像尺度空间(或高斯金字塔),并检测极值点 首先建立尺度空间,要使得图像具有尺度空间不变形,就要建立尺度空间,sift算法采用了高斯函数来建立尺度空间,高斯函数公式为:

上述公式G(x,y,e),即为尺度可变高斯函数。 而,一个图像的尺度空间L(x,y,e) ,定义为原始图像I(x,y)与上述的一个可变尺度的2维高斯函数G(x,y,e) 卷积运算。 即,原始影像I(x,y)在不同的尺度e下,与高斯函数G(x,y,e)进行卷积,得到L(x,y,e),如下: 以上的(x,y)是空间坐标,e,是尺度坐标,或尺度空间因子,e的大小决定平滑程度,大尺度对应图像的概貌特征,小尺度对应图像的细节特征。大的e值对应粗糙尺度(低分辨率),反之,对应精细尺度(高分辨率)。 尺度,受e这个参数控制的表示。而不同的L(x,y,e)就构成了尺度空间,具体计算的时候,即使连续的高斯函数,都被离散为(一般为奇数大小)(2*k+1) *(2*k+1)矩阵,来和数字图像进行卷积运算。 随着e的变化,建立起不同的尺度空间,或称之为建立起图像的高斯金字塔。 但,像上述L(x,y,e) = G(x,y,e)*I(x,y)的操作,在进行高斯卷积时,整个图像就要遍历所有的像素进行卷积(边界点除外),于此,就造成了时间和空间上的很大浪费。 为了更有效的在尺度空间检测到稳定的关键点,也为了缩小时间和空间复杂度,对上述的操作作了一个改建:即,提出了高斯差分尺度空间(DOG scale-space)。利用不同尺度的高斯差分与原始图像I(x,y)相乘,卷积生成。 DOG算子计算简单,是尺度归一化的LOG算子的近似。 ok,耐心点,咱们再来总结一下上述内容: 1、高斯卷积 在组建一组尺度空间后,再组建下一组尺度空间,对上一组尺度空间的最后一幅图像进行二分之一采样,得到下一组尺度空间的第一幅图像,然后进行像建立第一组尺度空间那样的操作,得到第二组尺度空间,公式定义为 L(x,y,e) = G(x,y,e)*I(x,y)

SIFT算法分析

SIFT算法分析 1 SIFT 主要思想 SIFT算法是一种提取局部特征的算法,在尺度空间寻找极值点,提取位置,尺度,旋转不变量。 2 SIFT 算法的主要特点: a)SIFT特征是图像的局部特征,其对旋转、尺度缩放、亮度变化保持不变性,对视角变化、仿射变换、噪声也保持一定程度的稳定性。 b)独特性(Distinctiveness)好,信息量丰富,适用于在海量特征数据库中进 行快速、准确的匹配。 c)多量性,即使少数的几个物体也可以产生大量SIFT特征向量。 d)高速性,经优化的SIFT匹配算法甚至可以达到实时的要求。 e)可扩展性,可以很方便的与其他形式的特征向量进行联合。 3 SIFT 算法流程图:

4 SIFT 算法详细 1)尺度空间的生成 尺度空间理论目的是模拟图像数据的多尺度特征。 高斯卷积核是实现尺度变换的唯一线性核,于是一副二维图像的尺度空间定义为: L( x, y, ) G( x, y, ) I (x, y) 其中G(x, y, ) 是尺度可变高斯函数,G( x, y, ) 2 1 2 y2 (x ) 2 e / 2 2 (x,y)是空间坐标,是尺度坐标。大小决定图像的平滑程度,大尺度对应图像的概貌特征,小尺度对应图像的细节特征。大的值对应粗糙尺度(低分辨率),反之,对应精细尺度(高分辨率)。 为了有效的在尺度空间检测到稳定的关键点,提出了高斯差分尺度空间(DOG scale-space)。利用不同尺度的高斯差分核与图像卷积生成。 D( x, y, ) (G( x, y,k ) G( x, y, )) I ( x, y) L( x, y,k ) L( x, y, ) DOG算子计算简单,是尺度归一化的LoG算子的近似。图像金字塔的构建:图像金字塔共O组,每组有S层,下一组的图像由上一 组图像降采样得到。 图1由两组高斯尺度空间图像示例金字塔的构建,第二组的第一副图像由第一组的第一副到最后一副图像由一个因子2降采样得到。图2 DoG算子的构建: 图1 Two octaves of a Gaussian scale-space image pyramid with s =2 intervals. The first image in the second octave is created by down sampling to last image in the previous

sift算法详解

尺度不变特征变换匹配算法详解 Scale Invariant Feature Transform(SIFT) Just For Fun 张东东zddmail@https://www.360docs.net/doc/1b12836210.html, 对于初学者,从David G.Lowe的论文到实现,有许多鸿沟,本文帮你跨越。 1、SIFT综述 尺度不变特征转换(Scale-invariant feature transform或SIFT)是一种电脑视觉的算法用来侦测与描述影像中的局部性特征,它在空间尺度中寻找极值点,并提取出其位置、尺度、旋转不变量,此算法由David Lowe在1999年所发表,2004年完善总结。 其应用范围包含物体辨识、机器人地图感知与导航、影像缝合、3D模型建立、手势辨识、影像追踪和动作比对。 此算法有其专利,专利拥有者为英属哥伦比亚大学。 局部影像特征的描述与侦测可以帮助辨识物体,SIFT特征是基于物体上的一些局部外观的兴趣点而与影像的大小和旋转无关。对于光线、噪声、些微视角改变的容忍度也相当高。基于这些特性,它们是高度显著而且相对容易撷取,在母数庞大的特征数据库中,很容易辨识物体而且鲜有误认。使用SIFT特征描述对于部分物体遮蔽的侦测率也相当高,甚至只需要3个以上的SIFT物体特征就足以计算出位置与方位。在现今的电脑硬件速度下和小型的特征数据库条件下,辨识速度可接近即时运算。SIFT特征的信息量大,适合在海量数据库中快速准确匹配。 SIFT算法的特点有: 1.SIFT特征是图像的局部特征,其对旋转、尺度缩放、亮度变化保持不变性,对视角变化、仿射变换、噪声也保持一定程度的稳定性; 2.独特性(Distinctiveness)好,信息量丰富,适用于在海量特征数据库中进行快速、准确的匹配; 3.多量性,即使少数的几个物体也可以产生大量的SIFT特征向量; 4.高速性,经优化的SIFT匹配算法甚至可以达到实时的要求;

SIFT算法与RANSAC算法分析

概率论问题征解报告: (算法分析类) SIFT算法与RANSAC算法分析 班级:自23 姓名:黄青虬 学号:2012011438 作业号:146

SIFT 算法是用于图像匹配的一个经典算法,RANSAC 算法是用于消除噪声的算法,这两者经常被放在一起使用,从而达到较好的图像匹配效果。 以下对这两个算法进行分析,由于sift 算法较为复杂,只重点介绍其中用到的概率统计概念与方法——高斯卷积及梯度直方图,其余部分只做简单介绍。 一. SIFT 1. 出处:David G. Lowe, The Proceedings of the Seventh IEEE International Conference on (Volume:2, Pages 1150 – 1157), 1999 2. 算法目的:提出图像特征,并且能够保持旋转、缩放、亮度变化保持不变性,从而 实现图像的匹配 3. 算法流程图: 原图像 4. 算法思想简介: (1) 特征点检测相关概念: ◆ 特征点:Sift 中的特征点指十分突出、不会因亮度而改变的点,比如角点、边 缘点、亮区域中的暗点等。特征点有三个特征:尺度、空间和大小 ◆ 尺度空间:我们要精确表示的物体都是通过一定的尺度来反映的。现实世界的 物体也总是通过不同尺度的观察而得到不同的变化。尺度空间理论最早在1962年提出,其主要思想是通过对原始图像进行尺度变换,获得图像多尺度下的尺度空间表示序列,对这些序列进行尺度空间主轮廓的提取,并以该主轮廓作为一种特征向量,实现边缘、角点检测和不同分辨率上的特征提取等。尺度空间中各尺度图像的模糊程度逐渐变大,能够模拟人在距离目标由近到远时目标在视网膜上的形成过程。尺度越大图像越模糊。 ◆ 高斯模糊:高斯核是唯一可以产生多尺度空间的核,一个图像的尺度空间,L (x,y,σ) ,定义为原始图像I(x,y)与一个可变尺度的2维高斯函数G(x,y,σ) 卷积运算 高斯函数: 高斯卷积的尺度空间: 不难看到,高斯函数与正态分布函数有点类似,所以在计算时,我们也是 ()()() ,,,,*,L x y G x y I x y σσ=()22221 ()(),,exp 22i i i i x x y y G x y σπσσ??-+-=- ? ??

SIFT算法和卷积神经网络算法在图像检索领域的应用分析

SIFT算法和卷积神经网络算法在图像检索领域的应用分析 1、引言 基于内容的图像检索是由于图像信息的飞速膨胀而得到关注并被提出来的。如何快速准确地提取图像信息内容是图像信息检索中最为关键的一步。传统图像信息检索系统多利用图像的底层特征,如颜色、纹理、形状以及空间关系等。这些特征对于图像检索有着不同的结果,但是同时也存在着不足,例如:颜色特征是一种全局的特征,它对图像或图像区域的方向、大小等变化不敏感,所以颜色特征不能很好的捕捉图像中对象的局部特征,也不能表达颜色空间分布的信息。纹理特征也是一种全局特征,它只是物体表面的一种特性,并不能完全反映物体的本质属性。基于形状的特征常常可以利用图像中感兴趣的目标进行检索,但是形状特征的提取,常常受到图像分割效果的影响。空间关系特征可以加强对图像内容的描述和区分能力,但空间关系特征对图像或者,目标的旋转、平移、尺度变换等比较敏感,并且不能准确地表达场景的信息。图像检索领域急需一种能够对目标进行特征提取,并且对图像目标亮度、旋转、平移、尺度甚至仿射不变的特征提取算法。 2、SIFT特征 SIFT(Scale-Invariant Feature Transform,尺度不变特征转换)是一种电脑视觉的算法,用来侦测与描述影像中的局部性特征,它在空间尺度中寻找极值点,并提取出其位置、尺度、旋转不变量,此算法由David Lowe在1999年所发表,2004年完善总结。 局部特征的描述与侦测可以帮助辨识物体,SIFT特征是基于物体上的一些局部外观的兴趣点,与目标的大小和旋转无关,对于光线、噪声、些微视角改变的容忍度也相当高。使用SIFT特征描述对于部分物体遮蔽的侦测成功率也相当高,甚至只需要3个以上的SIFT 物体特征就足以计算出位置与方位。在现今的电脑硬件速度和小型的特征数据库条件下,辨识速度可接近即时运算。SIFT特征的信息量大,也适合在海量数据库中快速准确匹配。 SIFT算法的特点有: (1)SIFT特征是图像的局部特征,其对旋转、尺度缩放、亮度变化保持不变形,是非常稳定的局部特征,现在应用非常广泛。(仿射变换,又称仿射映射,是指在几何中,一个向量空间进行一次线性变换并加上一个平移,变换为另一个向量空间。) (2)独特性(Distinctiveness)好,信息量丰富,适用于在海量特征数据库中进行快速、准确的匹配; (3)多量性,即使少数的几个物体也可以产生大量的SIFT特征向量; (4)高速性,经优化的SIFT匹配算法甚至可以达到实时的要求; (5)可扩展性,可以很方便的与其他形式的特征向量进行联合。 SIFT算法可以解决的问题:目标的自身状态、场景所处的环境和成像器材的成像特性等因素影响图像配准/目标识别跟踪的性能。 而SIFT算法在一定程度上可解决:

SIFT算法实现原理步骤

SIFT 算法实现步骤 :1 关键点检测、2 关键点描述、3 关键点匹配、4 消除错配点 1关键点检测 1.1 建立尺度空间 根据文献《Scale-space theory: A basic tool for analysing structures at different scales 》我们可知,高斯核是唯一可以产生多尺度空间的核,一个图像的尺度空间,L (x,y,σ) ,定义为原始图像I(x,y)与一个可变尺度的2维高斯函数G(x,y,σ) 卷积运算。 高斯函数 高斯金字塔 高斯金子塔的构建过程可分为两步: (1)对图像做高斯平滑; (2)对图像做降采样。 为了让尺度体现其连续性,在简单 下采样的基础上加上了高斯滤波。 一幅图像可以产生几组(octave ) 图像,一组图像包括几层 (interval )图像。 高斯图像金字塔共o 组、s 层, 则有: σ——尺度空间坐标;s ——sub-level 层坐标;σ0——初始尺度;S ——每组层数(一般为3~5)。 当图像通过相机拍摄时,相机的镜头已经对图像进行了一次初始的模糊,所以根据高斯模糊的性质: -第0层尺度 --被相机镜头模糊后的尺度 高斯金字塔的组数: M 、N 分别为图像的行数和列数 高斯金字塔的组内尺度与组间尺度: 组内尺度是指同一组(octave )内的尺度关系,组内相邻层尺度化简为: 组间尺度是指不同组直接的尺度关系,相邻组的尺度可化为: 最后可将组内和组间尺度归为: ()22221 ()(),,exp 22i i i i x x y y G x y σπσσ??-+-=- ? ??()()(),,,,*,L x y G x y I x y σσ=Octave 1 Octave 2 Octave 3 Octave 4 Octave 5σ2σ 4σ8 σ 0()2s S s σσ= g 0σ=init σpre σ()() 2log min ,3O M N ??=-?? 1 12S s s σσ+=g 1()2s S S o o s σσ++=g 222s S s S S o o σσ+=g g 121 2(,,,) i n k k k σσσσ--L 1 2 S k =

遥感图像处理在汶川地震中的应用分析

遥感图像处理在汶川地震中的应用分析 摘要 随着卫星技术的快速发展,遥感技术被越来越广泛的应用于国民经济的各个方面。本文结合汶川地震中遥感技术的应用实例,系统阐述了遥感应用于应急系统中需要解决的一系列关键技术问题。并就数据获取、薄云去除、图像镶嵌、图像解译,以及灾后重建中的若干关键技术问题展开了分析。关键词:遥感;地震;应用;关键技术 1 引言 长期以来,人们不断遭受到各种自然灾害的侵害,如地震、火山、洪水等,同时,由人为因素导致的灾难也不断发生,如火灾、恐怖袭击等。这些灾害具备破坏性、突发性、连锁性、难预报性等特点,往往容易造成重大的人员伤亡和巨大的财产损失。为了有效的应对突发事件,产生了各类应急系统。 灾区数据的实时获取足所有应急系统的基础。对于区域性的灾害,传统的地面调查方式,由于速度慢、面积小、需要人员现场勘查等无法避免的特点,很难满足应急系统的需要。相对而言,遥感技术有其得天独厚的优势:遥感传感器能实时的、大面积的、无接触的获取灾区数据,因此成为绝大多数应急系统中数据获取的主要手段。为了使遥感数据能满足应急系统中基础数据的要求,需要经过数据获取、数据预处理、图像解译等阶段的处理,最终提取出准确的遥感信息。下面将根据这三个阶段的处理技术展开阐述与分析,并以汶川地震为例,介绍遥感技术在应急救灾及灾后重建中的应用。 2 数据获取 灾害发生后,由于地形、气象等客观因素的影响,通过单一的遥感传感器往往很难获得灾区所有数据,需要充分发挥多种传感器的优势,获取灾区的各种类型数据,主要包括光学与SAR卫星遥感影像、光学与SAR航空遥感影像两大类。 2.1 光学与SAR卫星遥感影像的获取 此类数据包括国内外的众多高分辨率光学与SAR卫星遥感影像。从时间上说,重点是灾害发生前后数据的获取,以快速确定灾区的位置和前后的变化。 2.2 光学与SAR航空遥感影像的获取 此类数据是利用高空遥感琶机、无人机和卣升机等高、低空遥感平台,搭载遥感传感器,快速

SIFT特征提取分析

SIFT(Scale-invariant feature transform)是一种检测局部特征的算法,该算法通过求一幅图中的特征点(interest points, or corner points)及其有关scale 和orientation 的描述子得到特征并进行图像特征点匹配,获得了良好效果,详细解析如下: 算法描述 SIFT特征不只具有尺度不变性,即使改变旋转角度,图像亮度或拍摄视角,仍然能够得到好的检测效果。整个算法分为以下几个部分: 1. 构建尺度空间 这是一个初始化操作,尺度空间理论目的是模拟图像数据的多尺度特征。 高斯卷积核是实现尺度变换的唯一线性核,于是一副二维图像的尺度空间定义为: 其中G(x,y,σ) 是尺度可变高斯函数 (x,y)是空间坐标,是尺度坐标。σ大小决定图像的平滑程度,大尺度对应图像的概貌特征,小尺度对应图像的细节特征。大的σ值对应粗糙尺度(低分辨率),反之,对应精细尺度(高分辨率)。为了有效的在尺度空间检测到稳定的关键点,提出了高斯差分尺度空间(DOG scale-space)。利用不同尺度的高斯差分核与图像卷积生成。 下图所示不同σ下图像尺度空间:

关于尺度空间的理解说明:2kσ中的2是必须的,尺度空间是连续的。在 Lowe的论文中,将第0层的初始尺度定为1.6(最模糊),图片的初始尺度定为0.5(最清晰). 在检测极值点前对原始图像的高斯平滑以致图像丢失高频信息,所以Lowe 建议在建立尺度空间前首先对原始图像长宽扩展一倍,以保留原始图像信息,增加特征点数量。尺度越大图像越模糊。 图像金字塔的建立:对于一幅图像I,建立其在不同尺度(scale)的图像,也成为子八度(octave),这是为了scale-invariant,也就是在任何尺度都能够有对应的特征点,第一个子八度的scale为原图大小,后面每个octave为上一个octave降采样的结果,即原图的1/4(长宽分别减半),构成下一个子八度(高一层金字塔)。

sift算法的MATLAB程序

% [image, descriptors, locs] = sift(imageFile) % % This function reads an image and returns its SIFT keypoints. % Input parameters: % imageFile: the file name for the image. % % Returned: % image: the image array in double format % descriptors: a K-by-128 matrix, where each row gives an invariant % descriptor for one of the K keypoints. The descriptor is a vector % of 128 values normalized to unit length. % locs: K-by-4 matrix, in which each row has the 4 values for a % keypoint location (row, column, scale, orientation). The % orientation is in the range [-PI, PI] radians. % % Credits: Thanks for initial version of this program to D. Alvaro and % J.J. Guerrero, Universidad de Zaragoza (modified by D. Lowe) function [image, descriptors, locs] = sift(imageFile) % Load image image = imread(imageFile); % If you have the Image Processing Toolbox, you can uncomment the following % lines to allow input of color images, which will be converted to grayscale. % if isrgb(image) % image = rgb2gray(image); % end [rows, cols] = size(image); % Convert into PGM imagefile, readable by "keypoints" executable f = fopen('tmp.pgm', 'w'); if f == -1 error('Could not create file tmp.pgm.'); end fprintf(f, 'P5\n%d\n%d\n255\n', cols, rows); fwrite(f, image', 'uint8'); fclose(f); % Call keypoints executable if isunix command = '!./sift '; else command = '!siftWin32 '; end command = [command '

深度解析:移动机器人的几种视觉算法

深度解析:移动机器人的几种视觉算法谈到移动机器人,大家第一印象可能是服务机器人,实际上无人驾驶汽车、可自主飞行的无人机等等都属于移动机器人范畴。它们能和人一样能够在特定的环境下自由行走/飞行,都依赖于各自的定位导航、路径规划以及避障等功能,而视觉算法则是实现这些功能关键技术。 如果对移动机器人视觉算法进行拆解,你就会发现获取物体深度信息、定位导航以及壁障等都是基于不同的视觉算法,本文就带大家聊一聊几种不同但又必不可少的视觉算法组成。 本文作者陈子冲,系Segway Robot架构师和算法负责人。 移动机器人的视觉算法种类 Q:实现定位导航、路径规划以及避障,那么这些过程中需要哪些算法的支持? 谈起移动机器人,很多人想到的需求可能是这样的:“嘿,你能不能去那边帮我拿一杯热拿铁过来。”这个听上去对普通人很简单的任务,在机器人的世界里,却充满了各种挑战。为了完成这个任务,机器人首先需要载入周围环境的地图,精确定位自己在地图中的位置,然后根据地图进行路径规划控制自己完成移动。 而在移动的过程中,机器人还需要根据现场环境的三维深度信息,实时的躲避障碍物直至到达最终目标点。在这一连串机器人的思考过程中,可以分解为如下几部分的视觉算法: 1.深度信息提取 2.视觉导航 3.视觉避障 后面我们会详细说这些算法,而这些算法的基础,是机器人脑袋上的视觉传感器。 视觉算法的基础:传感器 Q:智能手机上的摄像头可以作为机器人的眼睛吗? 所有视觉算法的基础说到底来自于机器人脑袋上的视觉传感器,就好比人的眼睛和夜间视力非常好的动物相比,表现出来的感知能力是完全不同的。同样的,一个眼睛的动物

对世界的感知能力也要差于两个眼睛的动物。每个人手中的智能手机摄像头其实就可以作为机器人的眼睛,当下非常流行的Pokeman Go游戏就使用了计算机视觉技术来达成AR 的效果。 像上图画的那样,一个智能手机中摄像头模组,其内部包含如下几个重要的组件:镜头,IR filter,CMOS sensor。其中镜头一般由数片镜片组成,经过复杂的光学设计,现在可以用廉价的树脂材料,做出成像质量非常好的手机摄像头。 CMOS sensor上面会覆盖着叫做Bayer三色滤光阵列的滤色片。每个不同颜色的滤光片,可以通过特定的光波波长,对应CMOS感光器件上就可以在不同位置分别获得不同颜色的光强了。如果CMOS传感器的分辨率是4000x3000,为了得到同样分辨率的RGB 彩色图像,就需要用一种叫做demosaicing的计算摄像算法,从2绿1蓝1红的2x2网格中解算出2x2的RGB信息。

SIFT算法分析

SIFT算法分析 1 SI F T主要思想 S IF T算法就是一种提取局部特征得算法,在尺度空间寻找极值点,提取位置,尺度,旋转不变量。 2 SI FT算法得主要特点 a)SIFT特征就是图像得局部特征,其对旋转、尺度缩放、亮度变化保持不变性,对视角变化、仿射变换、噪声也保持一定程度得稳定性。 b )独特性(Dis t in c t iv e n es s)好,信息量丰富,适用于在海量特征数据库中进行快速、准确得匹配? c) 多量性,即使少数得几个物体也可以产生大量S IFT特征向量。 d) 高速性,经优化得SIF T匹配算法甚至可以达到实时得要求。 e) 可扩展性,可以很方便得与其她形式得特征向量进行联合。 3 SI F T算法流程图: 爹尺度空间扱值点检测 特征点的精确定位 I*

特征点的主方向计算 描述子的构造 特征向童I*

4 SIFT 算法详细 1)尺度空间得生成 尺度空间理论目得就是模拟图像数据得多尺度特征 . 高斯卷积核就是实现尺度变换得唯一线性核,于就是一副二维图像得尺度 空间定义为: 其中就是尺度可变高斯函数, (x ,y )就是空间坐标,就是尺度坐标.大小决定图像得平滑程度,大尺度对 应图像得概貌特征,小尺度对应图像得细节特征。大得值对应粗糙尺度 (低分辨 率),反之,对应精细尺度(高分辨率)。 为了有效得在尺度空间检测到稳定得关键点,提出了高斯差分尺度空间 (D O G seal e — s p ace ).利用不同尺度得高斯差分核与图像卷积生成。 D(x,y, ) (G(x, y,k ) G(x, y, )) I(x,y) L(x, y,k ) L(x, y,) D OG 算子计算简单,就是尺度归一化得L o G 算子得近似。 图像金字塔得构建:图像金字塔共O 组,每组有S 层,下一组得图像由上一组图 像降采样得到。 图1由两组高斯尺度空间图像示例金字塔得构建, 第二组得第一副图像由第 一组得第一副到最后一副图像由一个因子 2降采样得到。图2 DoG 算子得构建: t o last image in t h e p revio us 图 1 Tw o o ct a ves of a G aus sia n s cale-spac e i ma g e pyr amid with s = 2 inte r vals 、Th e fir s t image i n t h e sec o nd o e t a ve is created b y dow n sa m p l ing Oj-taw J Octave 聖

OpenCV SIFT特征算法详解与使用

SIFT概述 SIFT特征是非常稳定的图像特征,在图像搜索、特征匹配、图像分类检测等方面应用十分广泛,但是它的缺点也是非常明显,就是计算量比较大,很难实时,所以对一些实时要求比较高的常见SIFT算法还是无法适用。如今SIFT算法在深度学习特征提取与分类检测网络大行其道的背景下,已经越来越有鸡肋的感觉,但是它本身的算法知识还是很值得我们学习,对我们也有很多有益的启示,本质上SIFT算法是很多常见算法的组合与巧妙衔接,这个思路对我们自己处理问题可以带来很多有益的帮助。特别是SIFT特征涉及到尺度空间不变性与旋转不变性特征,是我们传统图像特征工程的两大利器,可以扩展与应用到很多图像特征提取的算法当中,比如SURF、HOG、HAAR、LBP等。夸张一点的说SIFT算法涵盖了图像特征提取必备的精髓思想,从特征点的检测到描述子生成,完成了对图像的准确描述,早期的ImageNet 比赛中,很多图像分类算法都是以SIFT与HOG特征为基础,所有SIFT算法还是值得认真详细解读一番的。SIFT特征提取归纳起来SIFT特征提取主要有如下几步: ?构建高斯多尺度金字塔 ?关键点精准定位与过滤 ?关键点方向指派 ?描述子生成 构建高斯多尺度金字塔 常见的高斯图像金字塔是每层只有一张图像,大致如下: 上述的是通过图像金字塔实现了多分辨率,如果我们在每一层高斯金字塔图像生成的时候,给予不同的sigma值,这样不同的sigam就会产生不同模糊版本的图像,在同一层中就是实现不同尺度的模糊图像,再结合高斯金

字塔,生成多个层多个尺度的金字塔,就是实现了图像的多尺度金字塔。同一张图像不同尺度高斯模糊如下: 为了在每层图像中检测 S 个尺度的极值点,DoG 金字塔每层需 S+2 张图像,因为每组的第一张和最后一张图像上不能检测极值,DoG 金字塔由高斯金字塔相邻两张相减得到,则高斯金字塔每层最少需 S+3 张图像,实际计算时 S 通常在2到5之间。SIFT算法中生成高斯金字塔的规则如下(尺度空间不变性): 关键点精准定位与过滤 对得到的每层DOG图像,计算窗口3x3x3范围除去中心点之外的26点与中心点比较大小,寻找最大值或者最小值(极值点),如下图: